
-  ](https://play.google.com/store/apps/details?id=in.canews.zeronetmobile)

+- Download APK: https://github.com/canewsin/zeronet_mobile/releases

-* [`layman -a raiagent`](https://github.com/leycec/raiagent)

-* `echo '>=net-vpn/zeronet-0.5.4' >> /etc/portage/package.accept_keywords`

-* *(Optional)* Enable Tor support: `echo 'net-vpn/zeronet tor' >>

- /etc/portage/package.use`

-* `emerge zeronet`

-* `rc-service zeronet start`

-* Open http://127.0.0.1:43110/ in your browser.

+### Android (arm, arm64, x86) Lightweight view-only client (1MB)

-See `/usr/share/doc/zeronet-*/README.gentoo.bz2` for further help.

+- Requires Android 4.1 Jelly Bean or later


+- [ ](https://play.google.com/store/apps/details?id=dev.zeronetx.app.lite)

-### [FreeBSD](https://www.freebsd.org/)

+### Install from source

-* `pkg install zeronet` or `cd /usr/ports/security/zeronet/ && make install clean`

-* `sysrc zeronet_enable="YES"`

-* `service zeronet start`

-* Open http://127.0.0.1:43110/ in your browser.

-

-### [Vagrant](https://www.vagrantup.com/)

-

-* `vagrant up`

-* Connect to the VM with `vagrant ssh`

-* `cd /vagrant`

-* Run `python2 zeronet.py --ui_ip 0.0.0.0`

-* Open http://127.0.0.1:43110/ in your browser.

-

-### [Docker](https://www.docker.com/)

-* `docker run -d -v


+ - APK download: https://github.com/canewsin/zeronet_mobile/releases

+

+### Android (arm, arm64, x86) Thin Client for Preview Only (Size 1MB)

+ - minimum supported Android API level: 16 (Android 4.1 Jelly Bean)


+ - [ ](https://play.google.com/store/apps/details?id=dev.zeronetx.app.lite)

+

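## Current limitations

-* ~~No torrent-like file splitting for big file support~~ (big file support added)

-* ~~No better anonymity than BitTorrent~~ (built-in full Tor support added)

-* File transfers are not compressed ~~or encrypted~~ (TLS support added)

+* File transfers are not compressed

* No private sites

## How do I create a ZeroNet site?

- * Click the **⋮** > **"Create new, empty site"** menu item on the [ZeroHello](http://127.0.0.1:43110/1HeLLo4uzjaLetFx6NH3PMwFP3qbRbTf3D) site

+ * Click the **⋮** > **"Create new, empty site"** menu item on the [ZeroHello](http://127.0.0.1:43110/1HELLoE3sFD9569CLCbHEAVqvqV7U2Ri9d) site

* You will be **redirected** to a brand-new site that only you can modify

* You can find and modify the site's content in the **data/[your site address]** directory

* After making changes, open your site, drag the "0" button in the top-right corner to the left, then click the **Sign** and **Publish** buttons at the bottom

-Next steps: [ZeroNet Developer Documentation](https://zeronet.io/docs/site_development/getting_started/)

+Next steps: [ZeroNet Developer Documentation](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/site_development/getting_started/)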

## Help this project

+- Bitcoin: 1ZeroNetyV5mKY9JF1gsm82TuBXHpfdLX (Preferred)

+- LiberaPay: https://liberapay.com/PramUkesh

+- Paypal: https://paypal.me/PramUkesh

+- Others: [Donate](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/help_zeronet/donate/#help-to-keep-zeronet-development-alive)

-- Bitcoin: 1QDhxQ6PraUZa21ET5fYUCPgdrwBomnFgX

-- Paypal: https://zeronet.io/docs/help_zeronet/donate/

-

-### Sponsors

-

-* Better macOS/Safari compatibility made possible by [BrowserStack.com](https://www.browserstack.com)

#### Thank you!

-* More info, help, changelog and zeronet sites: https://www.reddit.com/r/zeronet/

-* Chat with us at [#zeronet @ FreeNode](https://kiwiirc.com/client/irc.freenode.net/zeronet) or on [gitter](https://gitter.im/HelloZeroNet/ZeroNet)

-* [Here](https://gitter.im/ZeroNet-zh/Lobby) is a Chinese-language chat room on gitter

-* Email: hello@zeronet.io (PGP: [960F FF2D 6C14 5AA6 13E8 491B 5B63 BAE6 CB96 13AE](https://zeronet.io/files/tamas@zeronet.io_pub.asc))

+* More info, help, changelog and zeronet sites: https://www.reddit.com/r/zeronetx/

+* Chat with us at [#zeronet @ FreeNode](https://kiwiirc.com/client/irc.freenode.net/zeronet) or on [gitter](https://gitter.im/canewsin/ZeroNet)

+* [Here](https://gitter.im/canewsin/ZeroNet) is a Chinese-language chat room on gitter

+* Email: canews.in@gmail.com

diff --git a/README.md b/README.md

index d8e36a717..e5c2bccc1 100644

--- a/README.md

+++ b/README.md

@@ -1,6 +1,6 @@

-# ZeroNet [](https://travis-ci.org/HelloZeroNet/ZeroNet) [](https://zeronet.io/docs/faq/) [](https://zeronet.io/docs/help_zeronet/donate/)  [](https://hub.docker.com/r/nofish/zeronet)

-

-Decentralized websites using Bitcoin crypto and the BitTorrent network - https://zeronet.io / [onion](http://zeronet34m3r5ngdu54uj57dcafpgdjhxsgq5kla5con4qvcmfzpvhad.onion)

+# ZeroNet [](https://github.com/ZeroNetX/ZeroNet/actions/workflows/tests.yml) [](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/faq/) [](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/help_zeronet/donate/) [](https://hub.docker.com/r/canewsin/zeronet)

+

+Decentralized websites using Bitcoin crypto and the BitTorrent network - https://zeronet.dev / [ZeroNet Site](http://127.0.0.1:43110/1ZeroNetyV5mKY9JF1gsm82TuBXHpfdLX/), Unlike Bitcoin, ZeroNet Doesn't need a blockchain to run, But uses cryptography used by BTC, to ensure data integrity and validation.

## Why?

@@ -22,7 +22,9 @@ Decentralized websites using Bitcoin crypto and the BitTorrent network - https:/

* Password-less [BIP32](https://github.com/bitcoin/bips/blob/master/bip-0032.mediawiki)

based authorization: Your account is protected by the same cryptography as your Bitcoin wallet

* Built-in SQL server with P2P data synchronization: Allows easier site development and faster page load times

- * Anonymity: Full Tor network support with .onion hidden services instead of IPv4 addresses

+ * Anonymity:

+ * Full Tor network support with .onion hidden services instead of IPv4 addresses

+ * Full I2P network support with I2P Destinations instead of IPv4 addresses

* TLS encrypted connections

* Automatic uPnP port opening

* Plugin for multiuser (openproxy) support

@@ -33,22 +35,22 @@ Decentralized websites using Bitcoin crypto and the BitTorrent network - https:/

* After starting `zeronet.py` you will be able to visit zeronet sites using

`http://127.0.0.1:43110/{zeronet_address}` (eg.

- `http://127.0.0.1:43110/1HeLLo4uzjaLetFx6NH3PMwFP3qbRbTf3D`).

+ `http://127.0.0.1:43110/1HELLoE3sFD9569CLCbHEAVqvqV7U2Ri9d`).

* When you visit a new zeronet site, it tries to find peers using the BitTorrent

network so it can download the site files (html, css, js...) from them.

* Each visited site is also served by you.

* Every site contains a `content.json` file which holds all other files in a sha512 hash

and a signature generated using the site's private key.

* If the site owner (who has the private key for the site address) modifies the

- site, then he/she signs the new `content.json` and publishes it to the peers.

+ site and signs the new `content.json` and publishes it to the peers.

Afterwards, the peers verify the `content.json` integrity (using the

signature), they download the modified files and publish the new content to

other peers.

#### [Slideshow about ZeroNet cryptography, site updates, multi-user sites »](https://docs.google.com/presentation/d/1_2qK1IuOKJ51pgBvllZ9Yu7Au2l551t3XBgyTSvilew/pub?start=false&loop=false&delayms=3000)

-#### [Frequently asked questions »](https://zeronet.io/docs/faq/)

+#### [Frequently asked questions »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/faq/)

-#### [ZeroNet Developer Documentation »](https://zeronet.io/docs/site_development/getting_started/)

+#### [ZeroNet Developer Documentation »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/site_development/getting_started/)

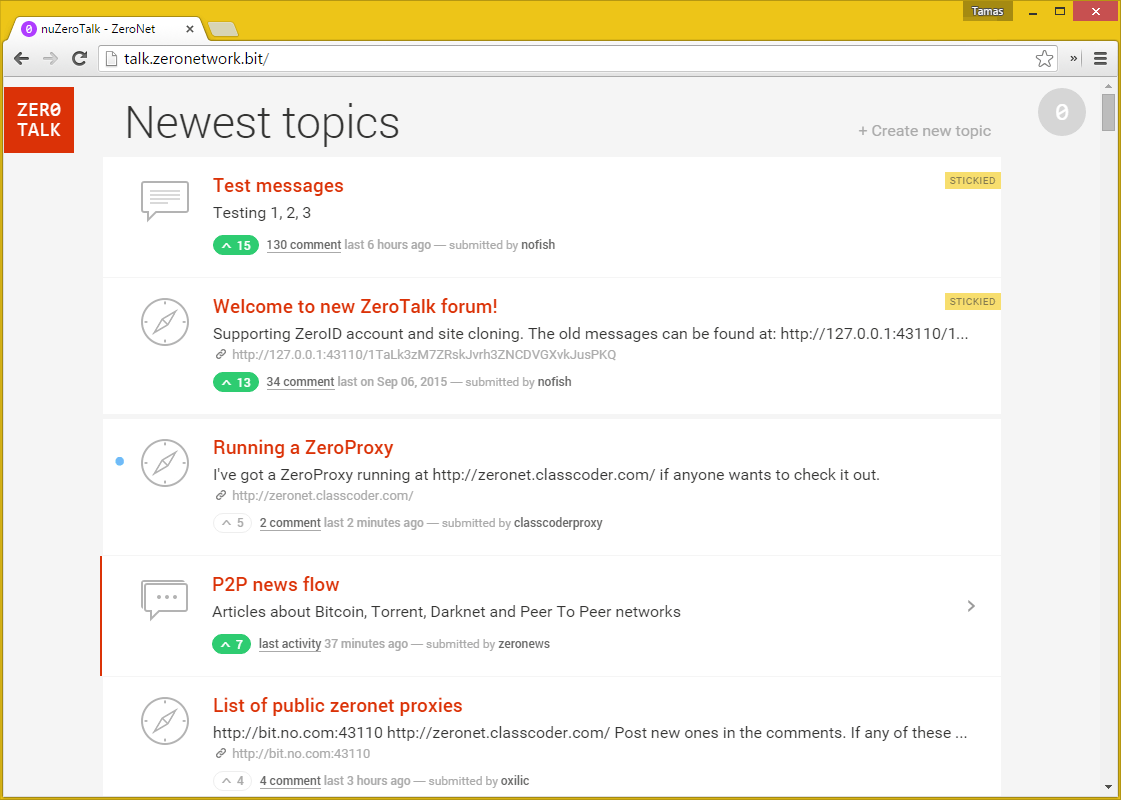

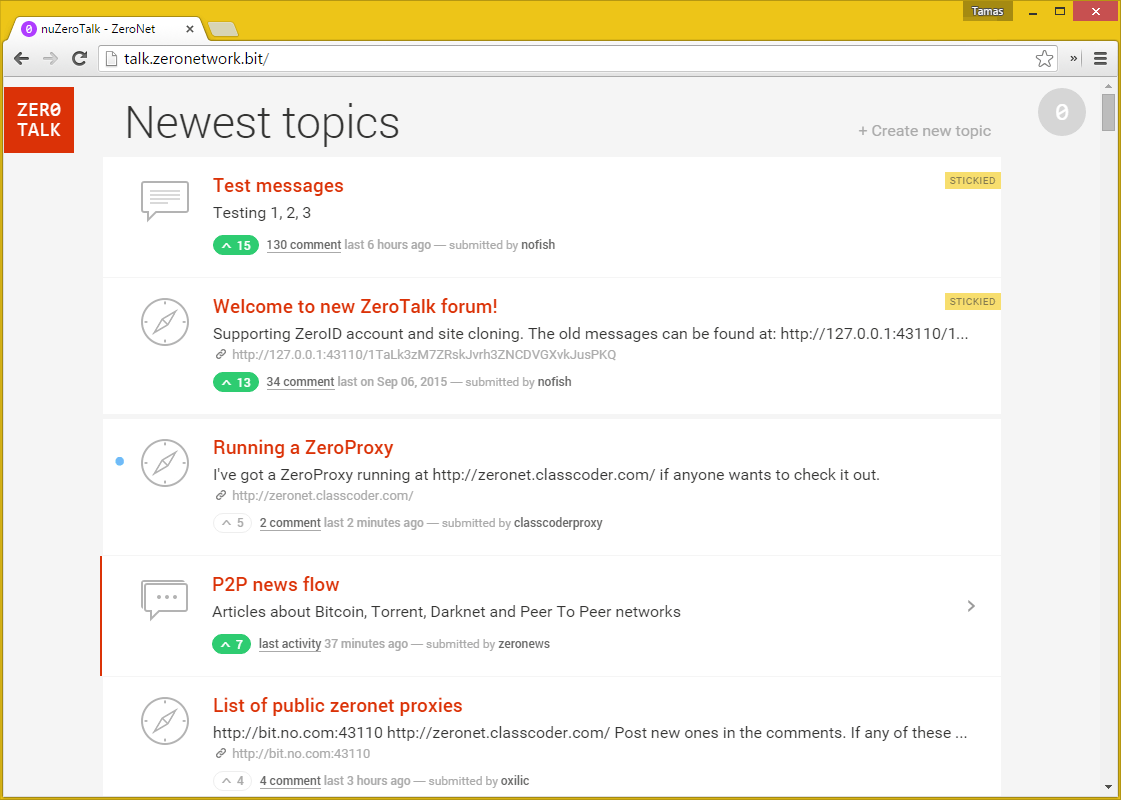

## Screenshots

@@ -56,82 +58,108 @@ Decentralized websites using Bitcoin crypto and the BitTorrent network - https:/

-#### [More screenshots in ZeroNet docs »](https://zeronet.io/docs/using_zeronet/sample_sites/)

+#### [More screenshots in ZeroNet docs »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/using_zeronet/sample_sites/)

## How to join

### Windows

- - Download [ZeroNet-py3-win64.zip](https://github.com/HelloZeroNet/ZeroNet-win/archive/dist-win64/ZeroNet-py3-win64.zip) (18MB)

+ - Download [ZeroNet-win.zip](https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-win.zip) (26MB)

- Unpack anywhere

- Run `ZeroNet.exe`

### macOS

- - Download [ZeroNet-dist-mac.zip](https://github.com/HelloZeroNet/ZeroNet-dist/archive/mac/ZeroNet-dist-mac.zip) (13.2MB)

+ - Download [ZeroNet-mac.zip](https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-mac.zip) (14MB)

- Unpack anywhere

- Run `ZeroNet.app`

### Linux (x86-64bit)

- - `wget https://github.com/HelloZeroNet/ZeroNet-linux/archive/dist-linux64/ZeroNet-py3-linux64.tar.gz`

- - `tar xvpfz ZeroNet-py3-linux64.tar.gz`

- - `cd ZeroNet-linux-dist-linux64/`

+ - `wget https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-linux.zip`

+ - `unzip ZeroNet-linux.zip`

+ - `cd ZeroNet-linux`

- Start with: `./ZeroNet.sh`

- Open the ZeroHello landing page in your browser by navigating to: http://127.0.0.1:43110/

__Tip:__ Start with `./ZeroNet.sh --ui_ip '*' --ui_restrict your.ip.address` to allow remote connections on the web interface.

### Android (arm, arm64, x86)

- - minimum Android version supported 16 (JellyBean)

+ - minimum Android version supported 21 (Android 5.0 Lollipop)

- [

](https://play.google.com/store/apps/details?id=dev.zeronetx.app.lite)

+


diff --git a/README.md b/README.md

index d8e36a717..e5c2bccc1 100644

--- a/README.md

+++ b/README.md

@@ -1,6 +1,6 @@

-# ZeroNet [](https://travis-ci.org/HelloZeroNet/ZeroNet) [](https://zeronet.io/docs/faq/) [](https://zeronet.io/docs/help_zeronet/donate/)  [](https://hub.docker.com/r/nofish/zeronet)

-

-Decentralized websites using Bitcoin crypto and the BitTorrent network - https://zeronet.io / [onion](http://zeronet34m3r5ngdu54uj57dcafpgdjhxsgq5kla5con4qvcmfzpvhad.onion)

+# ZeroNet [](https://github.com/ZeroNetX/ZeroNet/actions/workflows/tests.yml) [](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/faq/) [](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/help_zeronet/donate/) [](https://hub.docker.com/r/canewsin/zeronet)

+

+Decentralized websites using Bitcoin crypto and the BitTorrent network - https://zeronet.dev / [ZeroNet Site](http://127.0.0.1:43110/1ZeroNetyV5mKY9JF1gsm82TuBXHpfdLX/). Unlike Bitcoin, ZeroNet doesn't need a blockchain to run, but it uses the same cryptography as BTC to ensure data integrity and validation.

## Why?

@@ -22,7 +22,9 @@ Decentralized websites using Bitcoin crypto and the BitTorrent network - https:/

* Password-less [BIP32](https://github.com/bitcoin/bips/blob/master/bip-0032.mediawiki)

based authorization: Your account is protected by the same cryptography as your Bitcoin wallet

* Built-in SQL server with P2P data synchronization: Allows easier site development and faster page load times

- * Anonymity: Full Tor network support with .onion hidden services instead of IPv4 addresses

+ * Anonymity:

+ * Full Tor network support with .onion hidden services instead of IPv4 addresses

+ * Full I2P network support with I2P Destinations instead of IPv4 addresses

* TLS encrypted connections

* Automatic uPnP port opening

* Plugin for multiuser (openproxy) support

@@ -33,22 +35,22 @@ Decentralized websites using Bitcoin crypto and the BitTorrent network - https:/

* After starting `zeronet.py` you will be able to visit zeronet sites using

`http://127.0.0.1:43110/{zeronet_address}` (eg.

- `http://127.0.0.1:43110/1HeLLo4uzjaLetFx6NH3PMwFP3qbRbTf3D`).

+ `http://127.0.0.1:43110/1HELLoE3sFD9569CLCbHEAVqvqV7U2Ri9d`).

* When you visit a new zeronet site, it tries to find peers using the BitTorrent

network so it can download the site files (html, css, js...) from them.

* Each visited site is also served by you.

* Every site contains a `content.json` file which holds all other files in a sha512 hash

and a signature generated using the site's private key.

* If the site owner (who has the private key for the site address) modifies the

- site, then he/she signs the new `content.json` and publishes it to the peers.

+ site, then they sign the new `content.json` and publish it to the peers.

Afterwards, the peers verify the `content.json` integrity (using the

signature), they download the modified files and publish the new content to

other peers.

#### [Slideshow about ZeroNet cryptography, site updates, multi-user sites »](https://docs.google.com/presentation/d/1_2qK1IuOKJ51pgBvllZ9Yu7Au2l551t3XBgyTSvilew/pub?start=false&loop=false&delayms=3000)

-#### [Frequently asked questions »](https://zeronet.io/docs/faq/)

+#### [Frequently asked questions »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/faq/)

-#### [ZeroNet Developer Documentation »](https://zeronet.io/docs/site_development/getting_started/)

+#### [ZeroNet Developer Documentation »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/site_development/getting_started/)

## Screenshots

@@ -56,82 +58,108 @@ Decentralized websites using Bitcoin crypto and the BitTorrent network - https:/

-#### [More screenshots in ZeroNet docs »](https://zeronet.io/docs/using_zeronet/sample_sites/)

+#### [More screenshots in ZeroNet docs »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/using_zeronet/sample_sites/)

## How to join

### Windows

- - Download [ZeroNet-py3-win64.zip](https://github.com/HelloZeroNet/ZeroNet-win/archive/dist-win64/ZeroNet-py3-win64.zip) (18MB)

+ - Download [ZeroNet-win.zip](https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-win.zip) (26MB)

- Unpack anywhere

- Run `ZeroNet.exe`

### macOS

- - Download [ZeroNet-dist-mac.zip](https://github.com/HelloZeroNet/ZeroNet-dist/archive/mac/ZeroNet-dist-mac.zip) (13.2MB)

+ - Download [ZeroNet-mac.zip](https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-mac.zip) (14MB)

- Unpack anywhere

- Run `ZeroNet.app`

### Linux (x86-64bit)

- - `wget https://github.com/HelloZeroNet/ZeroNet-linux/archive/dist-linux64/ZeroNet-py3-linux64.tar.gz`

- - `tar xvpfz ZeroNet-py3-linux64.tar.gz`

- - `cd ZeroNet-linux-dist-linux64/`

+ - `wget https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-linux.zip`

+ - `unzip ZeroNet-linux.zip`

+ - `cd ZeroNet-linux`

- Start with: `./ZeroNet.sh`

- Open the ZeroHello landing page in your browser by navigating to: http://127.0.0.1:43110/

__Tip:__ Start with `./ZeroNet.sh --ui_ip '*' --ui_restrict your.ip.address` to allow remote connections on the web interface.

### Android (arm, arm64, x86)

- - minimum Android version supported 16 (JellyBean)

+ - minimum supported Android API level: 21 (Android 5.0 Lollipop)

- [ ](https://play.google.com/store/apps/details?id=in.canews.zeronetmobile)

- APK download: https://github.com/canewsin/zeronet_mobile/releases

- - XDA Labs: https://labs.xda-developers.com/store/app/in.canews.zeronet

-

+

+### Android (arm, arm64, x86) Thin Client for Preview Only (Size 1MB)

+ - minimum supported Android API level: 16 (Android 4.1 Jelly Bean)


+ - [ ](https://play.google.com/store/apps/details?id=dev.zeronetx.app.lite)

+

+

#### Docker

-There is an official image, built from source at: https://hub.docker.com/r/nofish/zeronet/

+There is an official image, built from source at: https://hub.docker.com/r/canewsin/zeronet/

-### Install from source

+### Online Proxies

+Proxies are like seedboxes for sites (i.e. ZNX running on a cloud VPS); you can try the ZeroNet experience through them. Add your proxy below if you have one.

+

+

+

+#### From Community

+

+https://0net-preview.com/

+

+

+### Install from source

+ - `wget https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-src.zip`

+ - `sudo apt install unzip && unzip ZeroNet-src.zip`

+ - `cd ZeroNet`

- `sudo apt-get update`

- `sudo apt-get install python3-pip`

- `sudo python3 -m pip install -r requirements.txt`

+ - > The above command should output "Successfully installed {Package Names Here...}" without any errors. If it reports errors, install the required dependencies with this command:

+ >

+ > `sudo apt install git autoconf pkg-config libffi-dev python3-pip python3-venv python3-dev build-essential libtool`

+ >

+ > and rerun `sudo python3 -m pip install -r requirements.txt`

- Start with: `python3 zeronet.py`

- Open the ZeroHello landing page in your browser by navigating to: http://127.0.0.1:43110/

+

## Current limitations

-* ~~No torrent-like file splitting for big file support~~ (big file support added)

-* ~~No more anonymous than Bittorrent~~ (built-in full Tor support added)

-* File transactions are not compressed ~~or encrypted yet~~ (TLS encryption added)

+* File transactions are not compressed

* No private sites

-

+* ~~No more anonymous than BitTorrent~~ (built-in full Tor and I2P support added)

## How can I create a ZeroNet site?

- * Click on **⋮** > **"Create new, empty site"** menu item on the site [ZeroHello](http://127.0.0.1:43110/1HeLLo4uzjaLetFx6NH3PMwFP3qbRbTf3D).

+ * Click on **⋮** > **"Create new, empty site"** menu item on the site [ZeroHello](http://127.0.0.1:43110/1HELLoE3sFD9569CLCbHEAVqvqV7U2Ri9d).

* You will be **redirected** to a completely new site that is only modifiable by you!

* You can find and modify your site's content in **data/[yoursiteaddress]** directory

* After the modifications, open your site, drag the top-right "0" button to the left, then press the **sign** and **publish** buttons at the bottom

-Next steps: [ZeroNet Developer Documentation](https://zeronet.io/docs/site_development/getting_started/)

+Next steps: [ZeroNet Developer Documentation](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/site_development/getting_started/)

## Help keep this project alive

-

-- Bitcoin: 1QDhxQ6PraUZa21ET5fYUCPgdrwBomnFgX

-- Paypal: https://zeronet.io/docs/help_zeronet/donate/

-

-### Sponsors

-

-* Better macOS/Safari compatibility made possible by [BrowserStack.com](https://www.browserstack.com)

+- Bitcoin: 1ZeroNetyV5mKY9JF1gsm82TuBXHpfdLX (Preferred)

+- LiberaPay: https://liberapay.com/PramUkesh

+- Paypal: https://paypal.me/PramUkesh

+- Others: [Donate](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/help_zeronet/donate/#help-to-keep-zeronet-development-alive)

#### Thank you!

-* More info, help, changelog, zeronet sites: https://www.reddit.com/r/zeronet/

-* Come, chat with us: [#zeronet @ FreeNode](https://kiwiirc.com/client/irc.freenode.net/zeronet) or on [gitter](https://gitter.im/HelloZeroNet/ZeroNet)

-* Email: hello@zeronet.io (PGP: [960F FF2D 6C14 5AA6 13E8 491B 5B63 BAE6 CB96 13AE](https://zeronet.io/files/tamas@zeronet.io_pub.asc))

+* More info, help, changelog, zeronet sites: https://www.reddit.com/r/zeronetx/

+* Come, chat with us: [#zeronet @ FreeNode](https://kiwiirc.com/client/irc.freenode.net/zeronet) or on [gitter](https://gitter.im/canewsin/ZeroNet)

+* Email: canews.in@gmail.com
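The README describes `content.json` as holding a sha512 hash of every other site file plus a signature made with the site's private key. Below is a minimal Python sketch of the hashing half only, under stated assumptions: the helpers `build_manifest` and `verify_file` are hypothetical, the manifest stores the full sha512 hex digest and size for each file, and no signing is done. ZeroNet's real `content.json` carries more fields, and its exact digest format may differ from this sketch.

```python
import hashlib
import json
import os
import tempfile


def build_manifest(site_dir):
    """Hypothetical helper: map every file under site_dir to its sha512 hex digest and size.

    Illustration only; ZeroNet's real content.json carries more fields and is
    signed with the site's private key, which this sketch does not attempt.
    """
    files = {}
    for root, _dirs, names in os.walk(site_dir):
        for name in sorted(names):
            path = os.path.join(root, name)
            rel = os.path.relpath(path, site_dir).replace(os.sep, "/")
            if rel == "content.json":
                continue  # the top-level manifest does not list itself
            with open(path, "rb") as f:
                data = f.read()
            files[rel] = {"sha512": hashlib.sha512(data).hexdigest(), "size": len(data)}
    return {"files": files}


def verify_file(manifest, site_dir, rel):
    """Recompute one file's sha512 and compare it with its manifest entry."""
    with open(os.path.join(site_dir, rel), "rb") as f:
        data = f.read()
    return hashlib.sha512(data).hexdigest() == manifest["files"][rel]["sha512"]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as site:
        with open(os.path.join(site, "index.html"), "wb") as f:
            f.write(b"<h1>Hello</h1>")
        manifest = build_manifest(site)
        print(json.dumps(manifest, indent=1))
        print(verify_file(manifest, site, "index.html"))  # True

        # Changing the file after the manifest was built makes verification fail.
        with open(os.path.join(site, "index.html"), "wb") as f:
            f.write(b"<h1>Tampered</h1>")
        print(verify_file(manifest, site, "index.html"))  # False
```

A peer receiving an updated site would recompute each downloaded file's hash like this before accepting it; verifying the signature over the manifest itself is a separate step that this sketch leaves out.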

diff --git a/Vagrantfile b/Vagrantfile

index 24fe0c45f..10a11c58a 100644

--- a/Vagrantfile

+++ b/Vagrantfile

@@ -40,6 +40,6 @@ Vagrant.configure(VAGRANTFILE_API_VERSION) do |config|

config.vm.provision "shell",

inline: "sudo apt-get install msgpack-python python-gevent python-pip python-dev -y"

config.vm.provision "shell",

- inline: "sudo pip install msgpack --upgrade"

+ inline: "sudo pip install -r requirements.txt --upgrade"

end

diff --git a/plugins b/plugins

new file mode 160000

index 000000000..689d9309f

--- /dev/null

+++ b/plugins

@@ -0,0 +1 @@

+Subproject commit 689d9309f73371f4681191b125ec3f2e14075eeb

diff --git a/plugins/AnnounceBitTorrent/AnnounceBitTorrentPlugin.py b/plugins/AnnounceBitTorrent/AnnounceBitTorrentPlugin.py

deleted file mode 100644

index fab7bb1ff..000000000

--- a/plugins/AnnounceBitTorrent/AnnounceBitTorrentPlugin.py

+++ /dev/null

@@ -1,148 +0,0 @@

-import time
-import urllib.request
-import struct
-import socket
-
-import lib.bencode_open as bencode_open
-from lib.subtl.subtl import UdpTrackerClient
-import socks
-import sockshandler
-import gevent
-
-from Plugin import PluginManager
-from Config import config
-from Debug import Debug
-from util import helper
-
-
-# We can only import plugin host clases after the plugins are loaded
-@PluginManager.afterLoad
-def importHostClasses():
-    global Peer, AnnounceError
-    from Peer import Peer
-    from Site.SiteAnnouncer import AnnounceError
-
-
-@PluginManager.registerTo("SiteAnnouncer")
-class SiteAnnouncerPlugin(object):
-    def getSupportedTrackers(self):
-        trackers = super(SiteAnnouncerPlugin, self).getSupportedTrackers()
-        if config.disable_udp or config.trackers_proxy != "disable":
-            trackers = [tracker for tracker in trackers if not tracker.startswith("udp://")]
-
-        return trackers
-
-    def getTrackerHandler(self, protocol):
-        if protocol == "udp":
-            handler = self.announceTrackerUdp
-        elif protocol == "http":
-            handler = self.announceTrackerHttp
-        elif protocol == "https":
-            handler = self.announceTrackerHttps
-        else:
-            handler = super(SiteAnnouncerPlugin, self).getTrackerHandler(protocol)
-        return handler
-
-    def announceTrackerUdp(self, tracker_address, mode="start", num_want=10):
-        s = time.time()
-        if config.disable_udp:
-            raise AnnounceError("Udp disabled by config")
-        if config.trackers_proxy != "disable":
-            raise AnnounceError("Udp trackers not available with proxies")
-
-        ip, port = tracker_address.split("/")[0].split(":")
-        tracker = UdpTrackerClient(ip, int(port))
-        if helper.getIpType(ip) in self.getOpenedServiceTypes():
-            tracker.peer_port = self.fileserver_port
-        else:
-            tracker.peer_port = 0
-        tracker.connect()
-        if not tracker.poll_once():
-            raise AnnounceError("Could not connect")
-        tracker.announce(info_hash=self.site.address_sha1, num_want=num_want, left=431102370)
-        back = tracker.poll_once()
-        if not back:
-            raise AnnounceError("No response after %.0fs" % (time.time() - s))
-        elif type(back) is dict and "response" in back:
-            peers = back["response"]["peers"]
-        else:
-            raise AnnounceError("Invalid response: %r" % back)
-
-        return peers
-
-    def httpRequest(self, url):
-        headers = {
-            'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.11 (KHTML, like Gecko) Chrome/23.0.1271.64 Safari/537.11',
-            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
-            'Accept-Charset': 'ISO-8859-1,utf-8;q=0.7,*;q=0.3',
-            'Accept-Encoding': 'none',
-            'Accept-Language': 'en-US,en;q=0.8',
-            'Connection': 'keep-alive'
-        }
-
-        req = urllib.request.Request(url, headers=headers)
-
-        if config.trackers_proxy == "tor":
-            tor_manager = self.site.connection_server.tor_manager
-            handler = sockshandler.SocksiPyHandler(socks.SOCKS5, tor_manager.proxy_ip, tor_manager.proxy_port)
-            opener = urllib.request.build_opener(handler)
-            return opener.open(req, timeout=50)
-        elif config.trackers_proxy == "disable":
-            return urllib.request.urlopen(req, timeout=25)
-        else:
-            proxy_ip, proxy_port = config.trackers_proxy.split(":")
-            handler = sockshandler.SocksiPyHandler(socks.SOCKS5, proxy_ip, int(proxy_port))
-            opener = urllib.request.build_opener(handler)
-            return opener.open(req, timeout=50)
-
-    def announceTrackerHttps(self, *args, **kwargs):
-        kwargs["protocol"] = "https"
-        return self.announceTrackerHttp(*args, **kwargs)
-
-    def announceTrackerHttp(self, tracker_address, mode="start", num_want=10, protocol="http"):
-        tracker_ip, tracker_port = tracker_address.rsplit(":", 1)
-        if helper.getIpType(tracker_ip) in self.getOpenedServiceTypes():
-            port = self.fileserver_port
-        else:
-            port = 1
-        params = {
-            'info_hash': self.site.address_sha1,
-            'peer_id': self.peer_id, 'port': port,
-            'uploaded': 0, 'downloaded': 0, 'left': 431102370, 'compact': 1, 'numwant': num_want,
-            'event': 'started'
-        }
-
-        url = protocol + "://" + tracker_address + "?" + urllib.parse.urlencode(params)
-
-        s = time.time()
-        response = None
-        # Load url
-        if config.tor == "always" or config.trackers_proxy != "disable":
-            timeout = 60
-        else:
-            timeout = 30
-
-        with gevent.Timeout(timeout, False): # Make sure of timeout
-            req = self.httpRequest(url)
-            response = req.read()
-            req.close()
-            req = None
-
-        if not response:
-            raise AnnounceError("No response after %.0fs" % (time.time() - s))
-
-        # Decode peers
-        try:
-            peer_data = bencode_open.loads(response)[b"peers"]
-            response = None
-            peer_count = int(len(peer_data) / 6)
-            peers = []
-            for peer_offset in range(peer_count):
-                off = 6 * peer_offset
-                peer = peer_data[off:off + 6]
-                addr, port = struct.unpack('!LH', peer)
-                peers.append({"addr": socket.inet_ntoa(struct.pack('!L', addr)), "port": port})
-        except Exception as err:
-            raise AnnounceError("Invalid response: %r (%s)" % (response, Debug.formatException(err)))
-
-        return peers

diff --git a/plugins/AnnounceBitTorrent/__init__.py b/plugins/AnnounceBitTorrent/__init__.py

deleted file mode 100644

index c74228554..000000000

--- a/plugins/AnnounceBitTorrent/__init__.py

+++ /dev/null

@@ -1 +0,0 @@

-from . import AnnounceBitTorrentPlugin

\ No newline at end of file
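The deleted `AnnounceBitTorrent` plugin announced each site to HTTP(S) trackers by URL-encoding a fixed set of BitTorrent query parameters onto the tracker address (`announceTrackerHttp`). The sketch below reproduces that parameter set as a standalone function. The function name `build_announce_url`, the example tracker address, port and peer ID are hypothetical, and deriving the info-hash as SHA-1 of the site address is an assumption about how `self.site.address_sha1` was computed; the diff does not show it.

```python
import hashlib
import urllib.parse


def build_announce_url(tracker_address, info_hash, peer_id, port, num_want=10, protocol="http"):
    """Build a BitTorrent HTTP tracker announce URL with the same query
    parameters the deleted announceTrackerHttp() sent.

    tracker_address is "host:port/path" without the scheme, as in the plugin.
    """
    params = {
        "info_hash": info_hash,  # raw 20-byte digest; urlencode percent-encodes bytes
        "peer_id": peer_id,
        "port": port,
        "uploaded": 0,
        "downloaded": 0,
        "left": 431102370,  # fixed value copied from the plugin
        "compact": 1,  # ask for the packed 6-bytes-per-peer response
        "numwant": num_want,
        "event": "started",
    }
    return protocol + "://" + tracker_address + "?" + urllib.parse.urlencode(params)


if __name__ == "__main__":
    site_address = "1HELLoE3sFD9569CLCbHEAVqvqV7U2Ri9d"  # ZeroHello address from the README
    info_hash = hashlib.sha1(site_address.encode()).digest()  # assumption, see lead-in
    print(build_announce_url("tracker.example.org:6969/announce", info_hash,
                             "-ZN0000-abcdefghijkl", 15441))  # hypothetical tracker, peer ID, port
```

Requesting `compact=1` is what lets the plugin treat the tracker's `peers` value as a flat byte string and slice it 6 bytes at a time.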

diff --git a/plugins/AnnounceBitTorrent/plugin_info.json b/plugins/AnnounceBitTorrent/plugin_info.json

deleted file mode 100644

index 824749ee4..000000000

--- a/plugins/AnnounceBitTorrent/plugin_info.json

+++ /dev/null

@@ -1,5 +0,0 @@

-{

- "name": "AnnounceBitTorrent",

- "description": "Discover new peers using BitTorrent trackers.",

- "default": "enabled"

-}

\ No newline at end of file
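The same plugin decoded the tracker's `peers` value as a "compact" list: 6 bytes per peer, a 4-byte IPv4 address followed by a 2-byte port, both in network byte order (`struct.unpack('!LH', ...)` in the deleted code). A standalone sketch of that decoding follows. The function names are hypothetical, and unlike the plugin, which silently ignored trailing bytes via `int(len(peer_data) / 6)`, this version rejects a length that is not a multiple of 6.

```python
import socket
import struct


def decode_compact_peers(peer_data: bytes):
    """Decode a compact peer list: 6 bytes per peer, a 4-byte IPv4 address
    followed by a 2-byte port, both big-endian."""
    if len(peer_data) % 6:
        raise ValueError("compact peer list length must be a multiple of 6")
    peers = []
    for off in range(0, len(peer_data), 6):
        ip_bytes, port = struct.unpack("!4sH", peer_data[off:off + 6])
        peers.append({"addr": socket.inet_ntoa(ip_bytes), "port": port})
    return peers


def encode_compact_peers(peers):
    """Inverse helper, used here only to build sample data."""
    return b"".join(struct.pack("!4sH", socket.inet_aton(p["addr"]), p["port"]) for p in peers)


if __name__ == "__main__":
    blob = encode_compact_peers([{"addr": "192.168.1.10", "port": 15441},
                                 {"addr": "10.0.0.1", "port": 6881}])
    print(decode_compact_peers(blob))
    # [{'addr': '192.168.1.10', 'port': 15441}, {'addr': '10.0.0.1', 'port': 6881}]
```

Unpacking the address as `4s` and passing it straight to `socket.inet_ntoa` avoids the integer round trip the plugin did with `'!L'`; the decoded result is the same.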

diff --git a/plugins/AnnounceLocal/AnnounceLocalPlugin.py b/plugins/AnnounceLocal/AnnounceLocalPlugin.py

deleted file mode 100644

index b92259665..000000000

--- a/plugins/AnnounceLocal/AnnounceLocalPlugin.py

+++ /dev/null

@@ -1,147 +0,0 @@

-import time

-

-import gevent

-

-from Plugin import PluginManager

-from Config import config

-from . import BroadcastServer

-

-

-@PluginManager.registerTo("SiteAnnouncer")

-class SiteAnnouncerPlugin(object):

- def announce(self, force=False, *args, **kwargs):

- local_announcer = self.site.connection_server.local_announcer

-

- thread = None

- if local_announcer and (force or time.time() - local_announcer.last_discover > 5 * 60):

- thread = gevent.spawn(local_announcer.discover, force=force)

- back = super(SiteAnnouncerPlugin, self).announce(force=force, *args, **kwargs)

-

- if thread:

- thread.join()

-

- return back

-

-

-class LocalAnnouncer(BroadcastServer.BroadcastServer):

- def __init__(self, server, listen_port):

- super(LocalAnnouncer, self).__init__("zeronet", listen_port=listen_port)

- self.server = server

-

- self.sender_info["peer_id"] = self.server.peer_id

- self.sender_info["port"] = self.server.port

- self.sender_info["broadcast_port"] = listen_port

- self.sender_info["rev"] = config.rev

-

- self.known_peers = {}

- self.last_discover = 0

-

- def discover(self, force=False):

- self.log.debug("Sending discover request (force: %s)" % force)

- self.last_discover = time.time()

- if force: # Probably new site added, clean cache

- self.known_peers = {}

-

- for peer_id, known_peer in list(self.known_peers.items()):

- if time.time() - known_peer["found"] > 20 * 60:

- del(self.known_peers[peer_id])

- self.log.debug("Timeout, removing from known_peers: %s" % peer_id)

- self.broadcast({"cmd": "discoverRequest", "params": {}}, port=self.listen_port)

-

- def actionDiscoverRequest(self, sender, params):

- back = {

- "cmd": "discoverResponse",

- "params": {

- "sites_changed": self.server.site_manager.sites_changed

- }

- }

-

- if sender["peer_id"] not in self.known_peers:

- self.known_peers[sender["peer_id"]] = {"added": time.time(), "sites_changed": 0, "updated": 0, "found": time.time()}

- self.log.debug("Got discover request from unknown peer %s (%s), time to refresh known peers" % (sender["ip"], sender["peer_id"]))

- gevent.spawn_later(1.0, self.discover) # Let the response arrive first to the requester

-

- return back

-

- def actionDiscoverResponse(self, sender, params):

- if sender["peer_id"] in self.known_peers:

- self.known_peers[sender["peer_id"]]["found"] = time.time()

- if params["sites_changed"] != self.known_peers.get(sender["peer_id"], {}).get("sites_changed"):

- # Peer's site list changed, request the list of new sites

- return {"cmd": "siteListRequest"}

- else:

- # Peer's site list is the same

- for site in self.server.sites.values():

- peer = site.peers.get("%s:%s" % (sender["ip"], sender["port"]))

- if peer:

- peer.found("local")

-

- def actionSiteListRequest(self, sender, params):

- back = []

- sites = list(self.server.sites.values())

-

- # Split addresses into groups of 100 to avoid the UDP size limit

- site_groups = [sites[i:i + 100] for i in range(0, len(sites), 100)]

- for site_group in site_groups:

- res = {}

- res["sites_changed"] = self.server.site_manager.sites_changed

- res["sites"] = [site.address_hash for site in site_group]

- back.append({"cmd": "siteListResponse", "params": res})

- return back

-

- def actionSiteListResponse(self, sender, params):

- s = time.time()

- peer_sites = set(params["sites"])

- num_found = 0

- added_sites = []

- for site in self.server.sites.values():

- if site.address_hash in peer_sites:

- added = site.addPeer(sender["ip"], sender["port"], source="local")

- num_found += 1

- if added:

- site.worker_manager.onPeers()

- site.updateWebsocket(peers_added=1)

- added_sites.append(site)

-

- # Save sites changed value to avoid unnecessary site list download

- if sender["peer_id"] not in self.known_peers:

- self.known_peers[sender["peer_id"]] = {"added": time.time()}

-

- self.known_peers[sender["peer_id"]]["sites_changed"] = params["sites_changed"]

- self.known_peers[sender["peer_id"]]["updated"] = time.time()

- self.known_peers[sender["peer_id"]]["found"] = time.time()

-

- self.log.debug(

- "Tracker result: Discover from %s response parsed in %.3fs, found: %s added: %s of %s" %

- (sender["ip"], time.time() - s, num_found, added_sites, len(peer_sites))

- )

-

-

-@PluginManager.registerTo("FileServer")

-class FileServerPlugin(object):

- def __init__(self, *args, **kwargs):

- super(FileServerPlugin, self).__init__(*args, **kwargs)

- if config.broadcast_port and config.tor != "always" and not config.disable_udp:

- self.local_announcer = LocalAnnouncer(self, config.broadcast_port)

- else:

- self.local_announcer = None

-

- def start(self, *args, **kwargs):

- if self.local_announcer:

- gevent.spawn(self.local_announcer.start)

- return super(FileServerPlugin, self).start(*args, **kwargs)

-

- def stop(self):

- if self.local_announcer:

- self.local_announcer.stop()

- res = super(FileServerPlugin, self).stop()

- return res

-

-

-@PluginManager.registerTo("ConfigPlugin")

-class ConfigPlugin(object):

- def createArguments(self):

- group = self.parser.add_argument_group("AnnounceLocal plugin")

- group.add_argument('--broadcast_port', help='UDP broadcasting port for local peer discovery', default=1544, type=int, metavar='port')

-

- return super(ConfigPlugin, self).createArguments()
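The local-discovery handshake in `AnnounceLocalPlugin.py` works in two stages: a peer answers `discoverRequest` with its `sites_changed` counter, and the requester only sends `siteListRequest` when that counter differs from the value cached in `known_peers`. The site list itself is split into groups of 100 address hashes so each `siteListResponse` fits in one UDP datagram. The following is a minimal, network-free sketch of those two rules, assuming plain dicts in place of the `LocalAnnouncer` state; `needs_site_list` and `chunk_site_hashes` are hypothetical helper names, not part of the plugin.

```python
from typing import Any, Dict, List

GROUP_SIZE = 100  # mirrors the "groups of 100" split in actionSiteListRequest


def needs_site_list(known_peers: Dict[str, Dict[str, Any]], peer_id: str, sites_changed: int) -> bool:
    """True when the advertised sites_changed differs from the cached value (or the peer is unknown)."""
    cached = known_peers.get(peer_id, {}).get("sites_changed")
    return sites_changed != cached


def chunk_site_hashes(address_hashes: List[bytes], sites_changed: int) -> List[Dict[str, Any]]:
    """Build one siteListResponse message per group of GROUP_SIZE hashes."""
    return [
        {
            "cmd": "siteListResponse",
            "params": {"sites_changed": sites_changed, "sites": address_hashes[i:i + GROUP_SIZE]},
        }
        for i in range(0, len(address_hashes), GROUP_SIZE)
    ]


if __name__ == "__main__":
    known = {"peer-a": {"sites_changed": 5}}
    print(needs_site_list(known, "peer-a", 5))  # False: list unchanged, no download needed
    print(needs_site_list(known, "peer-b", 5))  # True: unknown peer
    msgs = chunk_site_hashes([bytes([i]) for i in range(250)], sites_changed=7)
    print([len(m["params"]["sites"]) for m in msgs])  # [100, 100, 50]
```

Caching `sites_changed` per peer is what lets repeated discover rounds stay at a single small datagram each way when nothing has changed.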

diff --git a/plugins/AnnounceLocal/BroadcastServer.py b/plugins/AnnounceLocal/BroadcastServer.py

deleted file mode 100644

index 746788965..000000000

--- a/plugins/AnnounceLocal/BroadcastServer.py

+++ /dev/null

@@ -1,139 +0,0 @@

-import socket

-import logging

-import time

-from contextlib import closing

-

-from Debug import Debug

-from util import UpnpPunch

-from util import Msgpack

-

-

-class BroadcastServer(object):

- def __init__(self, service_name, listen_port=1544, listen_ip=''):

- self.log = logging.getLogger("BroadcastServer")

- self.listen_port = listen_port

- self.listen_ip = listen_ip

-

- self.running = False

- self.sock = None

- self.sender_info = {"service": service_name}

-

- def createBroadcastSocket(self):

- sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)

- sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)

- sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)

- if hasattr(socket, 'SO_REUSEPORT'):

- try:

- sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEPORT, 1)

- except Exception as err:

- self.log.warning("Error setting SO_REUSEPORT: %s" % err)

-

- binded = False

- for retry in range(3):

- try:

- sock.bind((self.listen_ip, self.listen_port))

- binded = True

- break

- except Exception as err:

- self.log.error(

- "Socket bind to %s:%s error: %s, retry #%s" %

- (self.listen_ip, self.listen_port, Debug.formatException(err), retry)

- )

- time.sleep(retry)

-

- if binded:

- return sock

- else:

- return False

-

- def start(self): # Listens for discover requests

- self.sock = self.createBroadcastSocket()

- if not self.sock:

- self.log.error("Unable to listen on port %s" % self.listen_port)

- return

-

- self.log.debug("Started on port %s" % self.listen_port)

-

- self.running = True

-

- while self.running:

- try:

- data, addr = self.sock.recvfrom(8192)

- except Exception as err:

- if self.running:

- self.log.error("Listener receive error: %s" % err)

- continue

-

- if not self.running:

- break

-

- try:

- message = Msgpack.unpack(data)

- response_addr, message = self.handleMessage(addr, message)

- if message:

- self.send(response_addr, message)

- except Exception as err:

- self.log.error("Handlemessage error: %s" % Debug.formatException(err))

- self.log.debug("Stopped listening on port %s" % self.listen_port)

-

- def stop(self):

- self.log.debug("Stopping, socket: %s" % self.sock)

- self.running = False

- if self.sock:

- self.sock.close()

-

- def send(self, addr, message):

- if type(message) is not list:

- message = [message]

-

- for message_part in message:

- message_part["sender"] = self.sender_info

-

- self.log.debug("Send to %s: %s" % (addr, message_part["cmd"]))

- with closing(socket.socket(socket.AF_INET, socket.SOCK_DGRAM)) as sock:

- sock.sendto(Msgpack.pack(message_part), addr)

-

- def getMyIps(self):

- return UpnpPunch._get_local_ips()

-

- def broadcast(self, message, port=None):

- if not port:

- port = self.listen_port

-

- my_ips = self.getMyIps()

- addr = ("255.255.255.255", port)

-

- message["sender"] = self.sender_info

- self.log.debug("Broadcast using ips %s on port %s: %s" % (my_ips, port, message["cmd"]))

-

- for my_ip in my_ips:

- try:

- with closing(socket.socket(socket.AF_INET, socket.SOCK_DGRAM)) as sock:

- sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)

- sock.bind((my_ip, 0))

- sock.sendto(Msgpack.pack(message), addr)

- except Exception as err:

- self.log.warning("Error sending broadcast using ip %s: %s" % (my_ip, err))

-

- def handleMessage(self, addr, message):

- self.log.debug("Got from %s: %s" % (addr, message["cmd"]))

- cmd = message["cmd"]

- params = message.get("params", {})

- sender = message["sender"]

- sender["ip"] = addr[0]

-

- func_name = "action" + cmd[0].upper() + cmd[1:]

- func = getattr(self, func_name, None)

-

- if sender["service"] != "zeronet" or sender["peer_id"] == self.sender_info["peer_id"]:

- # Skip messages not for us or sent by us

- message = None

- elif func:

- message = func(sender, params)

- else:

- self.log.debug("Unknown cmd: %s" % cmd)

- message = None

-

- return (sender["ip"], sender["broadcast_port"]), message
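`BroadcastServer.handleMessage` routes each packet by turning its `cmd` field into a method name (`discoverRequest` becomes `actionDiscoverRequest`), and drops packets that come from another service or carry the server's own `peer_id`. A stripped-down sketch of that dispatch, assuming a hypothetical `Dispatcher` class with no sockets or Msgpack; only the naming rule, the self/foreign-service filter and the reply address are reproduced. Unlike the original, it copies the `sender` dict instead of mutating it.

```python
from typing import Any, Dict, Optional, Tuple


class Dispatcher:
    """Hypothetical, socket-free stand-in for BroadcastServer.handleMessage."""

    def __init__(self, peer_id: str):
        self.sender_info = {"service": "zeronet", "peer_id": peer_id}

    def handle(self, addr: Tuple[str, int], message: Dict[str, Any]) -> Tuple[Tuple[str, int], Optional[Dict[str, Any]]]:
        cmd = message["cmd"]
        params = message.get("params", {})
        sender = dict(message["sender"])  # copy so the caller's dict is not mutated
        sender["ip"] = addr[0]  # trust the UDP source address, not the payload
        # "discoverRequest" -> "actionDiscoverRequest"
        func = getattr(self, "action" + cmd[0].upper() + cmd[1:], None)
        if sender["service"] != "zeronet" or sender["peer_id"] == self.sender_info["peer_id"]:
            reply = None  # another service, or our own broadcast echoed back
        elif func:
            reply = func(sender, params)
        else:
            reply = None  # unknown command
        # Replies go to the sender's advertised broadcast_port, not the UDP source port
        return (sender["ip"], sender["broadcast_port"]), reply

    def actionDiscoverRequest(self, sender: Dict[str, Any], params: Dict[str, Any]) -> Dict[str, Any]:
        # Placeholder payload; the real handler reports site_manager.sites_changed
        return {"cmd": "discoverResponse", "params": {"sites_changed": 1}}
```

For example, a `discoverRequest` arriving from `("192.168.1.5", 40000)` with `broadcast_port` 1544 in its sender info yields a `discoverResponse` addressed to `("192.168.1.5", 1544)`, because the original sends replies from a fresh ephemeral socket to the peer's listening port.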

diff --git a/plugins/AnnounceLocal/Test/TestAnnounce.py b/plugins/AnnounceLocal/Test/TestAnnounce.py

deleted file mode 100644

index 4def02edb..000000000

--- a/plugins/AnnounceLocal/Test/TestAnnounce.py

+++ /dev/null

@@ -1,113 +0,0 @@

-import time

-import copy

-

-import gevent

-import pytest

-import mock

-

-from AnnounceLocal import AnnounceLocalPlugin

-from File import FileServer

-from Test import Spy

-

-@pytest.fixture

-def announcer(file_server, site):

- file_server.sites[site.address] = site

- announcer = AnnounceLocalPlugin.LocalAnnouncer(file_server, listen_port=1100)

- file_server.local_announcer = announcer

- announcer.listen_port = 1100

- announcer.sender_info["broadcast_port"] = 1100

- announcer.getMyIps = mock.MagicMock(return_value=["127.0.0.1"])

- announcer.discover = mock.MagicMock(return_value=False) # Don't send discover requests automatically

- gevent.spawn(announcer.start)

- time.sleep(0.5)

-

- assert file_server.local_announcer.running

- return file_server.local_announcer

-

-@pytest.fixture

-def announcer_remote(request, site_temp):

- file_server_remote = FileServer("127.0.0.1", 1545)

- file_server_remote.sites[site_temp.address] = site_temp

- announcer = AnnounceLocalPlugin.LocalAnnouncer(file_server_remote, listen_port=1101)

- file_server_remote.local_announcer = announcer

- announcer.listen_port = 1101

- announcer.sender_info["broadcast_port"] = 1101

- announcer.getMyIps = mock.MagicMock(return_value=["127.0.0.1"])

- announcer.discover = mock.MagicMock(return_value=False) # Don't send discover requests automatically

- gevent.spawn(announcer.start)

- time.sleep(0.5)

-

- assert file_server_remote.local_announcer.running

-

- def cleanup():

- file_server_remote.stop()

- request.addfinalizer(cleanup)

-

-

- return file_server_remote.local_announcer

-

-@pytest.mark.usefixtures("resetSettings")

-@pytest.mark.usefixtures("resetTempSettings")

-class TestAnnounce:

- def testSenderInfo(self, announcer):

- sender_info = announcer.sender_info

- assert sender_info["port"] > 0

- assert len(sender_info["peer_id"]) == 20

- assert sender_info["rev"] > 0

-

- def testIgnoreSelfMessages(self, announcer):

- # No response to messages that have the same peer_id as the server

- assert not announcer.handleMessage(("0.0.0.0", 123), {"cmd": "discoverRequest", "sender": announcer.sender_info, "params": {}})[1]

-

- # Response to messages with different peer id

- sender_info = copy.copy(announcer.sender_info)

- sender_info["peer_id"] += "-"

- addr, res = announcer.handleMessage(("0.0.0.0", 123), {"cmd": "discoverRequest", "sender": sender_info, "params": {}})

- assert res["params"]["sites_changed"] > 0

-

- def testDiscoverRequest(self, announcer, announcer_remote):

- assert len(announcer_remote.known_peers) == 0

- with Spy.Spy(announcer_remote, "handleMessage") as responses:

- announcer_remote.broadcast({"cmd": "discoverRequest", "params": {}}, port=announcer.listen_port)

- time.sleep(0.1)

-

- response_cmds = [response[1]["cmd"] for response in responses]

- assert response_cmds == ["discoverResponse", "siteListResponse"]

- assert len(responses[-1][1]["params"]["sites"]) == 1

-

- # It should only request siteList if sites_changed value is different from last response

- with Spy.Spy(announcer_remote, "handleMessage") as responses:

- announcer_remote.broadcast({"cmd": "discoverRequest", "params": {}}, port=announcer.listen_port)

- time.sleep(0.1)

-

- response_cmds = [response[1]["cmd"] for response in responses]

- assert response_cmds == ["discoverResponse"]

-

- def testPeerDiscover(self, announcer, announcer_remote, site):

- assert announcer.server.peer_id != announcer_remote.server.peer_id

- assert len(list(announcer.server.sites.values())[0].peers) == 0

- announcer.broadcast({"cmd": "discoverRequest"}, port=announcer_remote.listen_port)

- time.sleep(0.1)

- assert len(list(announcer.server.sites.values())[0].peers) == 1

-

- def testRecentPeerList(self, announcer, announcer_remote, site):

- assert len(site.peers_recent) == 0

- assert len(site.peers) == 0

- with Spy.Spy(announcer, "handleMessage") as responses:

- announcer.broadcast({"cmd": "discoverRequest", "params": {}}, port=announcer_remote.listen_port)

- time.sleep(0.1)

- assert [response[1]["cmd"] for response in responses] == ["discoverResponse", "siteListResponse"]

- assert len(site.peers_recent) == 1

- assert len(site.peers) == 1

-

- # It should update peer without siteListResponse

- last_time_found = list(site.peers.values())[0].time_found

- site.peers_recent.clear()

- with Spy.Spy(announcer, "handleMessage") as responses:

- announcer.broadcast({"cmd": "discoverRequest", "params": {}}, port=announcer_remote.listen_port)

- time.sleep(0.1)

- assert [response[1]["cmd"] for response in responses] == ["discoverResponse"]

- assert len(site.peers_recent) == 1

- assert list(site.peers.values())[0].time_found > last_time_found

-

-

diff --git a/plugins/AnnounceLocal/Test/conftest.py b/plugins/AnnounceLocal/Test/conftest.py

deleted file mode 100644

index a88c642c7..000000000

--- a/plugins/AnnounceLocal/Test/conftest.py

+++ /dev/null

@@ -1,4 +0,0 @@

-from src.Test.conftest import *

-

-from Config import config

-config.broadcast_port = 0

diff --git a/plugins/AnnounceLocal/Test/pytest.ini b/plugins/AnnounceLocal/Test/pytest.ini

deleted file mode 100644

index d09210d1d..000000000

--- a/plugins/AnnounceLocal/Test/pytest.ini

+++ /dev/null

@@ -1,5 +0,0 @@

-[pytest]

-python_files = Test*.py

-addopts = -rsxX -v --durations=6

-markers =

- webtest: mark a test as a webtest.

\ No newline at end of file

diff --git a/plugins/AnnounceLocal/__init__.py b/plugins/AnnounceLocal/__init__.py

deleted file mode 100644

index 5b80abd2b..000000000

--- a/plugins/AnnounceLocal/__init__.py

+++ /dev/null

@@ -1 +0,0 @@

-from . import AnnounceLocalPlugin

\ No newline at end of file

diff --git a/plugins/AnnounceLocal/plugin_info.json b/plugins/AnnounceLocal/plugin_info.json

deleted file mode 100644

index 2908cbf15..000000000

--- a/plugins/AnnounceLocal/plugin_info.json

+++ /dev/null

@@ -1,5 +0,0 @@

-{

- "name": "AnnounceLocal",

- "description": "Discover LAN clients using UDP broadcasting.",

- "default": "enabled"

-}

\ No newline at end of file

diff --git a/plugins/AnnounceShare/AnnounceSharePlugin.py b/plugins/AnnounceShare/AnnounceSharePlugin.py

deleted file mode 100644

index 057ce55a1..000000000

--- a/plugins/AnnounceShare/AnnounceSharePlugin.py

+++ /dev/null

@@ -1,190 +0,0 @@

-import time

-import os

-import logging

-import json

-import atexit

-

-import gevent

-

-from Config import config

-from Plugin import PluginManager

-from util import helper

-

-

-class TrackerStorage(object):

- def __init__(self):

- self.log = logging.getLogger("TrackerStorage")

- self.file_path = "%s/trackers.json" % config.data_dir

- self.load()

- self.time_discover = 0.0

- atexit.register(self.save)

-

- def getDefaultFile(self):

- return {"shared": {}}

-

- def onTrackerFound(self, tracker_address, type="shared", my=False):

- if not tracker_address.startswith("zero://"):

- return False

-

- trackers = self.getTrackers()

- added = False

- if tracker_address not in trackers:

- trackers[tracker_address] = {

- "time_added": time.time(),

- "time_success": 0,

- "latency": 99.0,

- "num_error": 0,

- "my": False

- }

- self.log.debug("New tracker found: %s" % tracker_address)

- added = True

-

- trackers[tracker_address]["time_found"] = time.time()

- trackers[tracker_address]["my"] = my

- return added

-

- def onTrackerSuccess(self, tracker_address, latency):

- trackers = self.getTrackers()

- if tracker_address not in trackers:

- return False

-

- trackers[tracker_address]["latency"] = latency

- trackers[tracker_address]["time_success"] = time.time()

- trackers[tracker_address]["num_error"] = 0

-

- def onTrackerError(self, tracker_address):

- trackers = self.getTrackers()

- if tracker_address not in trackers:

- return False

-

- trackers[tracker_address]["time_error"] = time.time()

- trackers[tracker_address]["num_error"] += 1

-

- if len(self.getWorkingTrackers()) >= config.working_shared_trackers_limit:

- error_limit = 5

- else:

- error_limit = 30

-

- if trackers[tracker_address]["num_error"] > error_limit and trackers[tracker_address]["time_success"] < time.time() - 60 * 60:

- self.log.debug("Tracker %s looks down, removing." % tracker_address)

- del trackers[tracker_address]

-

- def getTrackers(self, type="shared"):

- return self.file_content.setdefault(type, {})

-

- def getWorkingTrackers(self, type="shared"):

- trackers = {

- key: tracker for key, tracker in self.getTrackers(type).items()

- if tracker["time_success"] > time.time() - 60 * 60

- }

- return trackers

-

- def getFileContent(self):

- if not os.path.isfile(self.file_path):

- open(self.file_path, "w").write("{}")

- return self.getDefaultFile()

- try:

- return json.load(open(self.file_path))

- except Exception as err:

- self.log.error("Error loading trackers list: %s" % err)

- return self.getDefaultFile()

-

- def load(self):

- self.file_content = self.getFileContent()

-

- trackers = self.getTrackers()

- self.log.debug("Loaded %s shared trackers" % len(trackers))

- for address, tracker in list(trackers.items()):

- tracker["num_error"] = 0

- if not address.startswith("zero://"):

- del trackers[address]

-

- def save(self):

- s = time.time()

- helper.atomicWrite(self.file_path, json.dumps(self.file_content, indent=2, sort_keys=True).encode("utf8"))

- self.log.debug("Saved in %.3fs" % (time.time() - s))

-

- def discoverTrackers(self, peers):

- if len(self.getWorkingTrackers()) > config.working_shared_trackers_limit:

- return False

- s = time.time()

- num_success = 0

- for peer in peers:

- if peer.connection and peer.connection.handshake.get("rev", 0) < 3560:

- continue # Not supported

-

- res = peer.request("getTrackers")

- if not res or "error" in res:

- continue

-

- num_success += 1

- for tracker_address in res["trackers"]:

- if type(tracker_address) is bytes: # Backward compatibility

- tracker_address = tracker_address.decode("utf8")

- added = self.onTrackerFound(tracker_address)

- if added: # Only add one tracker from one source

- break

-

- if not num_success and len(peers) < 20:

- self.time_discover = 0.0

-

- if num_success:

- self.save()

-

- self.log.debug("Trackers discovered from %s/%s peers in %.3fs" % (num_success, len(peers), time.time() - s))

-

-

-if "tracker_storage" not in locals():

- tracker_storage = TrackerStorage()

-

-

-@PluginManager.registerTo("SiteAnnouncer")

-class SiteAnnouncerPlugin(object):

- def getTrackers(self):

- if tracker_storage.time_discover < time.time() - 5 * 60:

- tracker_storage.time_discover = time.time()

- gevent.spawn(tracker_storage.discoverTrackers, self.site.getConnectedPeers())

- trackers = super(SiteAnnouncerPlugin, self).getTrackers()

- shared_trackers = list(tracker_storage.getTrackers("shared").keys())

- if shared_trackers:

- return trackers + shared_trackers

- else:

- return trackers

-

- def announceTracker(self, tracker, *args, **kwargs):

- res = super(SiteAnnouncerPlugin, self).announceTracker(tracker, *args, **kwargs)

- if res:

- latency = res

- tracker_storage.onTrackerSuccess(tracker, latency)

- elif res is False:

- tracker_storage.onTrackerError(tracker)

-

- return res

-

-

-@PluginManager.registerTo("FileRequest")

-class FileRequestPlugin(object):

- def actionGetTrackers(self, params):

- shared_trackers = list(tracker_storage.getWorkingTrackers("shared").keys())

- self.response({"trackers": shared_trackers})

-

-

-@PluginManager.registerTo("FileServer")

-class FileServerPlugin(object):

- def portCheck(self, *args, **kwargs):

- res = super(FileServerPlugin, self).portCheck(*args, **kwargs)

- if res and not config.tor == "always" and "Bootstrapper" in PluginManager.plugin_manager.plugin_names:

- for ip in self.ip_external_list:

- my_tracker_address = "zero://%s:%s" % (ip, config.fileserver_port)

- tracker_storage.onTrackerFound(my_tracker_address, my=True)

- return res

-

-

-@PluginManager.registerTo("ConfigPlugin")

-class ConfigPlugin(object):

- def createArguments(self):

- group = self.parser.add_argument_group("AnnounceShare plugin")

- group.add_argument('--working_shared_trackers_limit', help='Stop discovering new shared trackers after this number of shared trackers reached', default=5, type=int, metavar='limit')

-

- return super(ConfigPlugin, self).createArguments()
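In `TrackerStorage`, a shared tracker counts as working if it had a successful announce within the last hour, and `onTrackerError` removes a tracker only when it has failed more than `error_limit` times and has not succeeded in that hour. The limit is strict (5) once at least `working_shared_trackers_limit` working trackers are known, and lenient (30) otherwise, so a node with few trackers does not discard them too eagerly. A self-contained sketch of that rule, assuming plain dicts and an injectable `now` for testability; `working_trackers` and `should_remove` are hypothetical names.

```python
import time
from typing import Any, Dict, Optional

HOUR = 60 * 60


def working_trackers(trackers: Dict[str, Dict[str, Any]], now: float) -> Dict[str, Dict[str, Any]]:
    """Trackers with a successful announce in the last hour (as in getWorkingTrackers)."""
    return {key: t for key, t in trackers.items() if t["time_success"] > now - HOUR}


def should_remove(
    trackers: Dict[str, Dict[str, Any]],
    address: str,
    working_limit: int = 5,  # default of --working_shared_trackers_limit
    now: Optional[float] = None,
) -> bool:
    now = time.time() if now is None else now
    # Stricter limit once enough working trackers are known
    error_limit = 5 if len(working_trackers(trackers, now)) >= working_limit else 30
    tracker = trackers[address]
    return tracker["num_error"] > error_limit and tracker["time_success"] < now - HOUR
```

Requiring both conditions means a tracker that answered recently is never dropped for a burst of errors, however many it accumulates.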

diff --git a/plugins/AnnounceShare/Test/TestAnnounceShare.py b/plugins/AnnounceShare/Test/TestAnnounceShare.py

deleted file mode 100644

index 7178eac88..000000000

--- a/plugins/AnnounceShare/Test/TestAnnounceShare.py

+++ /dev/null

@@ -1,24 +0,0 @@

-import pytest

-

-from AnnounceShare import AnnounceSharePlugin

-from Peer import Peer

-from Config import config

-

-

-@pytest.mark.usefixtures("resetSettings")

-@pytest.mark.usefixtures("resetTempSettings")

-class TestAnnounceShare:

- def testAnnounceList(self, file_server):

- open("%s/trackers.json" % config.data_dir, "w").write("{}")

- tracker_storage = AnnounceSharePlugin.tracker_storage

- tracker_storage.load()

- peer = Peer(file_server.ip, 1544, connection_server=file_server)

- assert peer.request("getTrackers")["trackers"] == []

-

- tracker_storage.onTrackerFound("zero://%s:15441" % file_server.ip)

- assert peer.request("getTrackers")["trackers"] == []

-

- # It needs at least one successful announce before it is shared with other peers

- tracker_storage.onTrackerSuccess("zero://%s:15441" % file_server.ip, 1.0)

- assert peer.request("getTrackers")["trackers"] == ["zero://%s:15441" % file_server.ip]

-

diff --git a/plugins/AnnounceShare/Test/conftest.py b/plugins/AnnounceShare/Test/conftest.py

deleted file mode 100644

index 5abd4dd68..000000000

--- a/plugins/AnnounceShare/Test/conftest.py

+++ /dev/null

@@ -1,3 +0,0 @@

-from src.Test.conftest import *

-

-from Config import config

diff --git a/plugins/AnnounceShare/Test/pytest.ini b/plugins/AnnounceShare/Test/pytest.ini

deleted file mode 100644

index d09210d1d..000000000

--- a/plugins/AnnounceShare/Test/pytest.ini

+++ /dev/null

@@ -1,5 +0,0 @@

-[pytest]

-python_files = Test*.py

-addopts = -rsxX -v --durations=6

-markers =

- webtest: mark a test as a webtest.

\ No newline at end of file

diff --git a/plugins/AnnounceShare/__init__.py b/plugins/AnnounceShare/__init__.py

deleted file mode 100644

index dc1e40bd7..000000000

--- a/plugins/AnnounceShare/__init__.py

+++ /dev/null

@@ -1 +0,0 @@

-from . import AnnounceSharePlugin

diff --git a/plugins/AnnounceShare/plugin_info.json b/plugins/AnnounceShare/plugin_info.json

deleted file mode 100644

index 0ad07e714..000000000

--- a/plugins/AnnounceShare/plugin_info.json

+++ /dev/null

@@ -1,5 +0,0 @@

-{

- "name": "AnnounceShare",

- "description": "Share possible trackers between clients.",

- "default": "enabled"

-}

\ No newline at end of file

diff --git a/plugins/AnnounceZero/AnnounceZeroPlugin.py b/plugins/AnnounceZero/AnnounceZeroPlugin.py

deleted file mode 100644

index 623cd4b5e..000000000

--- a/plugins/AnnounceZero/AnnounceZeroPlugin.py

+++ /dev/null

@@ -1,140 +0,0 @@

-import time

-import itertools

-

-from Plugin import PluginManager

-from util import helper

-from Crypt import CryptRsa

-

-allow_reload = False # No source reload supported in this plugin

-time_full_announced = {} # Tracker address: Last time all sites were announced to the tracker

-connection_pool = {} # Tracker address: Peer object

-

-

-# We can only import plugin host classes after the plugins are loaded

-@PluginManager.afterLoad

-def importHostClasses():

- global Peer, AnnounceError

- from Peer import Peer

- from Site.SiteAnnouncer import AnnounceError

-

-

-# Process result got back from tracker

-def processPeerRes(tracker_address, site, peers):

- added = 0

-

- # Onion

- found_onion = 0

- for packed_address in peers["onion"]:

- found_onion += 1

- peer_onion, peer_port = helper.unpackOnionAddress(packed_address)

- if site.addPeer(peer_onion, peer_port, source="tracker"):

- added += 1

-

- # Ip4

- found_ipv4 = 0

- peers_normal = itertools.chain(peers.get("ip4", []), peers.get("ipv4", []), peers.get("ipv6", []))

- for packed_address in peers_normal:

- found_ipv4 += 1

- peer_ip, peer_port = helper.unpackAddress(packed_address)

- if site.addPeer(peer_ip, peer_port, source="tracker"):

- added += 1

-

- if added:

- site.worker_manager.onPeers()

- site.updateWebsocket(peers_added=added)

- return added

-

-

-@PluginManager.registerTo("SiteAnnouncer")

-class SiteAnnouncerPlugin(object):

- def getTrackerHandler(self, protocol):

- if protocol == "zero":

- return self.announceTrackerZero

- else:

- return super(SiteAnnouncerPlugin, self).getTrackerHandler(protocol)

-

- def announceTrackerZero(self, tracker_address, mode="start", num_want=10):

- global time_full_announced

- s = time.time()

-

- need_types = ["ip4"] # ip4 for backward compatibility reasons

- need_types += self.site.connection_server.supported_ip_types

- if self.site.connection_server.tor_manager.enabled:

- need_types.append("onion")

-

- if mode == "start" or mode == "more": # Single: Announce only this site

- sites = [self.site]

- full_announce = False

- else: # Multi: Announce all currently serving sites

- full_announce = True

- if time.time() - time_full_announced.get(tracker_address, 0) < 60 * 15: # Don't re-announce all sites within a short time

- return None

- time_full_announced[tracker_address] = time.time()

- from Site import SiteManager

- sites = [site for site in SiteManager.site_manager.sites.values() if site.isServing()]

-

- # Create request

- add_types = self.getOpenedServiceTypes()

- request = {

- "hashes": [], "onions": [], "port": self.fileserver_port, "need_types": need_types, "need_num": 20, "add": add_types

- }

- for site in sites:

- if "onion" in add_types:

- onion = self.site.connection_server.tor_manager.getOnion(site.address)

- request["onions"].append(onion)

- request["hashes"].append(site.address_hash)

-

- # Tracker can remove sites that we don't announce

- if full_announce:

- request["delete"] = True

-

- # Send request to tracker

- tracker_peer = connection_pool.get(tracker_address) # Re-use tracker connection if possible

- if not tracker_peer:

- tracker_ip, tracker_port = tracker_address.rsplit(":", 1)

- tracker_peer = Peer(str(tracker_ip), int(tracker_port), connection_server=self.site.connection_server)

- tracker_peer.is_tracker_connection = True

- connection_pool[tracker_address] = tracker_peer

-

- res = tracker_peer.request("announce", request)

-

- if not res or "peers" not in res:

- if full_announce:

- time_full_announced[tracker_address] = 0

- raise AnnounceError("Invalid response: %s" % res)

-

- # Add peers from response to site

- site_index = 0

- peers_added = 0

- for site_res in res["peers"]:

- site = sites[site_index]

- peers_added += processPeerRes(tracker_address, site, site_res)

- site_index += 1

-

- # Check if we need to sign prove the onion addresses

- if "onion_sign_this" in res:

- self.site.log.debug("Signing %s for %s to add %s onions" % (res["onion_sign_this"], tracker_address, len(sites)))

- request["onion_signs"] = {}

- request["onion_sign_this"] = res["onion_sign_this"]

- request["need_num"] = 0

- for site in sites:

- onion = self.site.connection_server.tor_manager.getOnion(site.address)

- publickey = self.site.connection_server.tor_manager.getPublickey(onion)

- if publickey not in request["onion_signs"]:

- sign = CryptRsa.sign(res["onion_sign_this"].encode("utf8"), self.site.connection_server.tor_manager.getPrivatekey(onion))

- request["onion_signs"][publickey] = sign

- res = tracker_peer.request("announce", request)

- if not res or "onion_sign_this" in res:

- if full_announce:

- time_full_announced[tracker_address] = 0

- raise AnnounceError("Announce onion address failed: %s" % res)

-

- if full_announce:

- tracker_peer.remove() # Close connection, we don't need it in the next 5 minutes

-

- self.site.log.debug(

- "Tracker announce result: zero://%s (sites: %s, new peers: %s, add: %s, mode: %s) in %.3fs" %

- (tracker_address, site_index, peers_added, add_types, mode, time.time() - s)

- )

-

- return True
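`announceTrackerZero` announces only the current site in `start` and `more` modes; any other mode is a full announce of every serving site, which is sent to a given tracker at most once per 15 minutes, and the timestamp is reset to 0 when the tracker returns an invalid response so the next attempt is not blocked. A minimal sketch of that bookkeeping, assuming a hypothetical `FullAnnounceThrottle` class in place of the module-level `time_full_announced` dict, with an injectable `now` for testing.

```python
import time
from typing import Dict, Optional

FULL_ANNOUNCE_INTERVAL = 60 * 15  # seconds, as in announceTrackerZero


class FullAnnounceThrottle:
    """Hypothetical helper mirroring the time_full_announced bookkeeping."""

    def __init__(self) -> None:
        self.last: Dict[str, float] = {}  # tracker address -> time of last full announce

    def try_acquire(self, tracker_address: str, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        if now - self.last.get(tracker_address, 0) < FULL_ANNOUNCE_INTERVAL:
            return False  # all sites were announced to this tracker recently
        self.last[tracker_address] = now  # record before sending, like the original
        return True

    def reset(self, tracker_address: str) -> None:
        # On an invalid tracker response the original sets the timestamp back to 0
        self.last[tracker_address] = 0
```

Recording the timestamp before the request is sent means concurrent announce attempts to the same tracker do not both perform a full announce; `reset` undoes that reservation only on failure.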

diff --git a/plugins/AnnounceZero/__init__.py b/plugins/AnnounceZero/__init__.py

deleted file mode 100644

index 8aec5ddb6..000000000

--- a/plugins/AnnounceZero/__init__.py

+++ /dev/null

@@ -1 +0,0 @@

-from . import AnnounceZeroPlugin

\ No newline at end of file

diff --git a/plugins/AnnounceZero/plugin_info.json b/plugins/AnnounceZero/plugin_info.json

deleted file mode 100644

index 50e7cf7fd..000000000

--- a/plugins/AnnounceZero/plugin_info.json

+++ /dev/null

@@ -1,5 +0,0 @@

-{

- "name": "AnnounceZero",

- "description": "Announce using ZeroNet protocol.",

- "default": "enabled"

-}

\ No newline at end of file

diff --git a/plugins/Benchmark/BenchmarkDb.py b/plugins/Benchmark/BenchmarkDb.py

deleted file mode 100644

index a767a3f47..000000000

--- a/plugins/Benchmark/BenchmarkDb.py

+++ /dev/null

@@ -1,143 +0,0 @@

-import os

-import json

-import contextlib

-import time

-

-from Plugin import PluginManager

-from Config import config

-

-

-@PluginManager.registerTo("Actions")

-class ActionsPlugin:

- def getBenchmarkTests(self, online=False):

- tests = super().getBenchmarkTests(online)

- tests.extend([

- {"func": self.testDbConnect, "num": 10, "time_standard": 0.27},

- {"func": self.testDbInsert, "num": 10, "time_standard": 0.91},

- {"func": self.testDbInsertMultiuser, "num": 1, "time_standard": 0.57},

- {"func": self.testDbQueryIndexed, "num": 1000, "time_standard": 0.84},

- {"func": self.testDbQueryNotIndexed, "num": 1000, "time_standard": 1.30}

- ])

- return tests

-

-

- @contextlib.contextmanager

- def getTestDb(self):

- from Db import Db

- path = "%s/benchmark.db" % config.data_dir

- if os.path.isfile(path):

- os.unlink(path)

- schema = {

- "db_name": "TestDb",

- "db_file": path,

- "maps": {

- ".*": {

- "to_table": {

- "test": "test"

- }

- }

- },

- "tables": {

- "test": {

- "cols": [

- ["test_id", "INTEGER"],

- ["title", "TEXT"],

- ["json_id", "INTEGER REFERENCES json (json_id)"]

- ],

- "indexes": ["CREATE UNIQUE INDEX test_key ON test(test_id, json_id)"],

- "schema_changed": 1426195822

- }

- }

- }

-

- db = Db.Db(schema, path)

-

- yield db

-

- db.close()

- if os.path.isfile(path):

- os.unlink(path)

-

- def testDbConnect(self, num_run=1):

- import sqlite3

- for i in range(num_run):

- with self.getTestDb() as db:

- db.checkTables()

- yield "."

- yield "(SQLite version: %s, API: %s)" % (sqlite3.sqlite_version, sqlite3.version)

-

- def testDbInsert(self, num_run=1):

- yield "x 1000 lines "

- for u in range(num_run):

- with self.getTestDb() as db:

- db.checkTables()

- data = {"test": []}

- for i in range(1000): # 1000 lines of data

- data["test"].append({"test_id": i, "title": "Testdata for %s message %s" % (u, i)})

- json.dump(data, open("%s/test_%s.json" % (config.data_dir, u), "w"))

- db.updateJson("%s/test_%s.json" % (config.data_dir, u))

- os.unlink("%s/test_%s.json" % (config.data_dir, u))

- assert db.execute("SELECT COUNT(*) FROM test").fetchone()[0] == 1000

- yield "."

-

- def fillTestDb(self, db):

- db.checkTables()

- cur = db.getCursor()

- cur.logging = False

- for u in range(100, 200): # 100 users

- data = {"test": []}

- for i in range(100): # 100 lines of data

- data["test"].append({"test_id": i, "title": "Testdata for %s message %s" % (u, i)})

- json.dump(data, open("%s/test_%s.json" % (config.data_dir, u), "w"))

- db.updateJson("%s/test_%s.json" % (config.data_dir, u), cur=cur)

- os.unlink("%s/test_%s.json" % (config.data_dir, u))

- if u % 10 == 0:

- yield "."

-

- def testDbInsertMultiuser(self, num_run=1):

- yield "x 100 users x 100 lines "

- for u in range(num_run):

- with self.getTestDb() as db:

- for progress in self.fillTestDb(db):

- yield progress

- num_rows = db.execute("SELECT COUNT(*) FROM test").fetchone()[0]

- assert num_rows == 10000, "%s != 10000" % num_rows

-

- def testDbQueryIndexed(self, num_run=1):

- s = time.time()

- with self.getTestDb() as db:

- for progress in self.fillTestDb(db):

- pass

- yield " (Db warmup done in %.3fs) " % (time.time() - s)

- found_total = 0

- for i in range(num_run): # 1000x by test_id

- found = 0

- res = db.execute("SELECT * FROM test WHERE test_id = %s" % (i % 100))

- for row in res:

- found_total += 1

- found += 1

- del(res)

- yield "."

- assert found == 100, "%s != 100 (i: %s)" % (found, i)

- yield "Found: %s" % found_total

-

- def testDbQueryNotIndexed(self, num_run=1):

- s = time.time()

- with self.getTestDb() as db:

- for progress in self.fillTestDb(db):

- pass

- yield " (Db warmup done in %.3fs) " % (time.time() - s)

- found_total = 0

- for i in range(num_run): # query by json_id (not indexed)

- found = 0

- res = db.execute("SELECT * FROM test WHERE json_id = %s" % i)

- for row in res:

- found_total += 1

- found += 1

- yield "."

- del(res)

- if i == 0 or i > 100:

- assert found == 0, "%s != 0 (i: %s)" % (found, i)

- else:

- assert found == 100, "%s != 100 (i: %s)" % (found, i)

- yield "Found: %s" % found_total
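The two query benchmarks above differ only in the filtered column. `testDbQueryIndexed` filters on `test_id`, while `testDbQueryNotIndexed` filters on `json_id`, which its name implies has no index. The following minimal sketch uses the standard `sqlite3` module to make that difference visible with `EXPLAIN QUERY PLAN`. It assumes a hypothetical three-column `test` table with an index on `test_id` only. The real schema is created by `getTestDb()`/`checkTables()`, which are not shown here. The sketch also binds values as parameters instead of `%`-formatting them into the SQL.

```python
import sqlite3
import time

# Hypothetical schema loosely modelled on the benchmark's `test` table:
# only `test_id` has an index, `json_id` does not.
ALLOWED_COLUMNS = ("test_id", "json_id")


def build_test_db():
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE test (json_id INTEGER, test_id INTEGER, title TEXT)")
    db.execute("CREATE INDEX test_id_idx ON test (test_id)")
    rows = (
        (json_id, test_id, "Testdata for %s message %s" % (json_id, test_id))
        for json_id in range(1, 101)  # 100 "users" -> json_id 1..100
        for test_id in range(100)     # 100 rows per user
    )
    with db:  # a single transaction for all 10 000 inserts
        db.executemany("INSERT INTO test VALUES (?, ?, ?)", rows)
    return db


def _select(column):
    # Column names cannot be bound as parameters, so whitelist them instead.
    if column not in ALLOWED_COLUMNS:
        raise ValueError("unexpected column: %r" % column)
    return "SELECT * FROM test WHERE %s = ?" % column


def query_plan(db, column):
    # Each EXPLAIN QUERY PLAN row is (id, parent, notused, detail).
    rows = db.execute("EXPLAIN QUERY PLAN " + _select(column), (0,)).fetchall()
    return " | ".join(row[3] for row in rows)


def count_matches(db, column, value):
    return len(db.execute(_select(column), (value,)).fetchall())


def time_queries(db, column, num_run=1000):
    start = time.perf_counter()
    for i in range(num_run):
        db.execute(_select(column), (i % 100,)).fetchall()
    return time.perf_counter() - start


if __name__ == "__main__":
    db = build_test_db()
    for column in ALLOWED_COLUMNS:
        print(column, "->", query_plan(db, column))
        print("  1000 queries: %.3fs" % time_queries(db, column))
```

On typical SQLite builds, the `test_id` plan shows a `SEARCH ... USING INDEX test_id_idx` step and the `json_id` plan shows a full `SCAN`. That full scan is why the non-indexed benchmark is slower. The exact plan text varies between SQLite versions.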

diff --git a/plugins/Benchmark/BenchmarkPack.py b/plugins/Benchmark/BenchmarkPack.py

deleted file mode 100644

index 6b92e43a0..000000000

--- a/plugins/Benchmark/BenchmarkPack.py

+++ /dev/null

@@ -1,183 +0,0 @@

-import os

-import io

-from collections import OrderedDict

-

-from Plugin import PluginManager

-from Config import config

-from util import Msgpack

-

-

-@PluginManager.registerTo("Actions")

-class ActionsPlugin:

- def createZipFile(self, path):

- import zipfile

- test_data = b"Test" * 1024

- file_name = b"\xc3\x81rv\xc3\xadzt\xc5\xb1r\xc5\x91%s.txt".decode("utf8")

- with zipfile.ZipFile(path, 'w') as archive:

- for y in range(100):

- zip_info = zipfile.ZipInfo(file_name % y, (1980, 1, 1, 0, 0, 0))

- zip_info.compress_type = zipfile.ZIP_DEFLATED

- zip_info.create_system = 3

- zip_info.flag_bits = 0

- zip_info.external_attr = 25165824

- archive.writestr(zip_info, test_data)

-

- def testPackZip(self, num_run=1):

- """

- Test zip file creating

- """

- yield "x 100 x 5KB "

- from Crypt import CryptHash

- zip_path = '%s/test.zip' % config.data_dir

- for i in range(num_run):

- self.createZipFile(zip_path)

- yield "."

-

- archive_size = os.path.getsize(zip_path) / 1024

- yield "(Generated file size: %.2fkB)" % archive_size

-

- hash = CryptHash.sha512sum(open(zip_path, "rb"))

- valid = "cb32fb43783a1c06a2170a6bc5bb228a032b67ff7a1fd7a5efb9b467b400f553"

- assert hash == valid, "Invalid hash: %s != %s
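`testPackZip` compares the archive's hash against a hard-coded value. That only works because `createZipFile` pins every piece of metadata:

- a fixed 1980-01-01 timestamp
- Unix as the creating system (`create_system = 3`)
- a fixed `external_attr` (`25165824 == 0o600 << 16`, i.e. `rw-------` permissions)

The following minimal in-memory sketch applies the same idea. `truncated_sha512` is a hypothetical stand-in for `CryptHash.sha512sum`. It assumes, as the 64-character expected value suggests, that `CryptHash.sha512sum` returns the first 64 hex digits of a SHA-512 digest. The bytes are reproducible within one Python/zlib build. A different zlib version may compress differently, so a hard-coded hash can still change across environments.

```python
import hashlib
import io
import zipfile

TEST_DATA = b"Test" * 1024  # 4 KiB of payload per entry
# Same non-ASCII name as the benchmark: "Árvíztűrő%s.txt"
FILE_NAME = b"\xc3\x81rv\xc3\xadzt\xc5\xb1r\xc5\x91%s.txt".decode("utf8")


def create_zip_bytes(num_files=100):
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w") as archive:
        for y in range(num_files):
            # Fixed metadata so the archive does not depend on the clock or host OS.
            info = zipfile.ZipInfo(FILE_NAME % y, date_time=(1980, 1, 1, 0, 0, 0))
            info.compress_type = zipfile.ZIP_DEFLATED
            info.create_system = 3            # 3 = Unix
            info.external_attr = 0o600 << 16  # == 25165824, rw------- permissions
            archive.writestr(info, TEST_DATA)
    return buf.getvalue()


def truncated_sha512(data):
    # Hypothetical stand-in for CryptHash.sha512sum: first 64 hex digits of SHA-512.
    return hashlib.sha512(data).hexdigest()[:64]


if __name__ == "__main__":
    first, second = create_zip_bytes(), create_zip_bytes()
    print("identical:", first == second)
    print("size: %.2fkB" % (len(first) / 1024))
    print("hash:", truncated_sha512(first))
```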


+

+

#### Docker

-There is an official image, built from source at: https://hub.docker.com/r/nofish/zeronet/

+There is an official image, built from source at: https://hub.docker.com/r/canewsin/zeronet/

-### Install from source

+### Online Proxies

+Proxies are like seedboxes for sites (i.e. ZNX running on a cloud VPS); you can try out ZeroNet through one of them. Add your proxy below if you have one.

+

+

+

+#### From Community

+

+https://0net-preview.com/

+

+

+### Install from source

+ - `wget https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-src.zip`

+ - `sudo apt install unzip && unzip ZeroNet-src.zip`

+ - `cd ZeroNet`

- `sudo apt-get update`

- `sudo apt-get install python3-pip`

- `sudo python3 -m pip install -r requirements.txt`

+ - > The above command should output "Successfully installed {Package Names Here...}" without any errors. In case of errors, install the required dependencies with this command:

+ >

+ > `sudo apt install git autoconf pkg-config libffi-dev python3-pip python3-venv python3-dev build-essential libtool`

+ >

+ > and rerun `sudo python3 -m pip install -r requirements.txt`

- Start with: `python3 zeronet.py`

- Open the ZeroHello landing page in your browser by navigating to: http://127.0.0.1:43110/

+

## Current limitations

-* ~~No torrent-like file splitting for big file support~~ (big file support added)

-* ~~No more anonymous than Bittorrent~~ (built-in full Tor support added)

-* File transactions are not compressed ~~or encrypted yet~~ (TLS encryption added)

+* File transactions are not compressed

* No private sites

-

+* ~~No more anonymous than Bittorrent~~ (built-in full Tor and I2P support added)

## How can I create a ZeroNet site?

- * Click on **⋮** > **"Create new, empty site"** menu item on the site [ZeroHello](http://127.0.0.1:43110/1HeLLo4uzjaLetFx6NH3PMwFP3qbRbTf3D).

+ * Click on **⋮** > **"Create new, empty site"** menu item on the site [ZeroHello](http://127.0.0.1:43110/1HELLoE3sFD9569CLCbHEAVqvqV7U2Ri9d).

* You will be **redirected** to a completely new site that is only modifiable by you!

* You can find and modify your site's content in the **data/[yoursiteaddress]** directory

* After making your modifications, open your site, drag the top-right "0" button to the left, then press the **sign** and **publish** buttons at the bottom

-Next steps: [ZeroNet Developer Documentation](https://zeronet.io/docs/site_development/getting_started/)

+Next steps: [ZeroNet Developer Documentation](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/site_development/getting_started/)

## Help keep this project alive

-

-- Bitcoin: 1QDhxQ6PraUZa21ET5fYUCPgdrwBomnFgX

-- Paypal: https://zeronet.io/docs/help_zeronet/donate/

-

-### Sponsors

-

-* Better macOS/Safari compatibility made possible by [BrowserStack.com](https://www.browserstack.com)

+- Bitcoin: 1ZeroNetyV5mKY9JF1gsm82TuBXHpfdLX (Preferred)

+- LiberaPay: https://liberapay.com/PramUkesh

+- Paypal: https://paypal.me/PramUkesh

+- Others: [Donate](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/help_zeronet/donate/#help-to-keep-zeronet-development-alive)

#### Thank you!

-* More info, help, changelog, zeronet sites: https://www.reddit.com/r/zeronet/

-* Come, chat with us: [#zeronet @ FreeNode](https://kiwiirc.com/client/irc.freenode.net/zeronet) or on [gitter](https://gitter.im/HelloZeroNet/ZeroNet)

-* Email: hello@zeronet.io (PGP: [960F FF2D 6C14 5AA6 13E8 491B 5B63 BAE6 CB96 13AE](https://zeronet.io/files/tamas@zeronet.io_pub.asc))

+* More info, help, changelog, zeronet sites: https://www.reddit.com/r/zeronetx/

+* Come, chat with us: [#zeronet @ FreeNode](https://kiwiirc.com/client/irc.freenode.net/zeronet) or on [gitter](https://gitter.im/canewsin/ZeroNet)

+* Email: canews.in@gmail.com
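The install steps end with opening http://127.0.0.1:43110/ in a browser. The following minimal standard-library sketch confirms from a script that `python3 zeronet.py` is actually serving that address. `zeronet_ui_reachable` is a hypothetical helper, not part of ZeroNet. It bypasses any configured HTTP proxy, since the UI is local, and treats any HTTP response, even an error status, as "the server is up".

```python
import urllib.error
import urllib.request

DEFAULT_UI_URL = "http://127.0.0.1:43110/"  # address given in the install instructions


def zeronet_ui_reachable(url=DEFAULT_UI_URL, timeout=3.0):
    """Return True if something answers HTTP requests at `url`."""
    # Empty ProxyHandler: talk to the local UI directly, ignoring http_proxy settings.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout):
            return True
    except urllib.error.HTTPError as err:
        err.close()
        return True  # the server responded, just not with a 2xx status
    except OSError:
        return False  # connection refused, timeout, ...


if __name__ == "__main__":
    print("ZeroNet UI up:", zeronet_ui_reachable())
```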

diff --git a/Vagrantfile b/Vagrantfile

index 24fe0c45f..10a11c58a 100644

--- a/Vagrantfile

+++ b/Vagrantfile

@@ -40,6 +40,6 @@ Vagrant.configure(VAGRANTFILE_API_VERSION) do |config|

config.vm.provision "shell",

inline: "sudo apt-get install msgpack-python python-gevent python-pip python-dev -y"

config.vm.provision "shell",

- inline: "sudo pip install msgpack --upgrade"

+ inline: "sudo pip install -r requirements.txt --upgrade"

end

diff --git a/plugins b/plugins

new file mode 160000

index 000000000..689d9309f

--- /dev/null

+++ b/plugins

@@ -0,0 +1 @@

+Subproject commit 689d9309f73371f4681191b125ec3f2e14075eeb