
| 1 | +# Single node deployment |

| 2 | + |

| 3 | +_WARNING: you are on the master branch, please refer to the docs on the branch that matches your `cortex version`_ |

| 4 | + |

| 5 | +You can use Cortex to deploy models on a single node. Deploying to a single node can be cheaper than spinning up a Cortex cluster with 1 worker node. It may also be useful for testing your model on a GPU if you don't have access to one locally. |

| 6 | + |

| 7 | +Deploying on a single node entails `ssh`ing into that instance and running Cortex locally. When using this approach, you won't get the advantages of deploying to a cluster, such as autoscaling and rolling updates. |

| 8 | + |

| 9 | +We've included the step-by-step instructions for AWS, although the process should be similar for any other cloud provider. |

| 10 | + |

| 11 | +## AWS |

| 12 | + |

| 13 | +### Step 1 |

| 14 | + |

| 15 | +Navigate to the [EC2 dashboard](https://console.aws.amazon.com/ec2/home) in your AWS Web Console and click "Launch instance". |

| 16 | + |

| 17 | + |

| 18 | + |

| 19 | +### Step 2 |

| 20 | + |

| 21 | +Choose a Linux-based AMI. We recommend using "Ubuntu Server 18.04 LTS". If you plan to serve models on a GPU, we recommend using "Deep Learning AMI (Ubuntu 18.04)" because it comes with Docker Engine and the NVIDIA drivers pre-installed. |

| 22 | + |

| 23 | + |

| 24 | + |

| 25 | +### Step 3 |

| 26 | + |

| 27 | +Choose your desired instance type (it should have enough CPU and memory to run your model). It is typically a good idea to have at least 1 GB of memory to spare for your operating system and any other processes that you might want to run on the instance. To run most Cortex examples, an m5.large instance is sufficient. |

| 28 | + |

| 29 | +Selecting an appropriate GPU instance depends on the kind of GPU card you want. Different GPU instance families have different GPU cards (e.g. the g4 family uses NVIDIA T4 while the p2 family uses NVIDIA K80). For typical GPU use cases, g4dn.xlarge is one of the cheaper instances that should be able to serve most large models, including deep learning models such as GPT-2. |

| 30 | + |

| 31 | +Once you've chosen your instance, click "Next: Configure instance details". |

| 32 | + |

| 33 | + |

| 34 | + |

| 35 | +### Step 4 |

| 36 | + |

| 37 | +To make testing and development easier, make sure that the EC2 instance has a public IP address. Then click "Next: Add Storage". |

| 38 | + |

| 39 | + |

| 40 | + |

| 41 | +### Step 5 |

| 42 | + |

| 43 | +We recommend having at least 50 GB of storage to save your models to disk and to download the docker images needed to serve your model. Then click "Next: Add Tags". |

| 44 | + |

| 45 | + |

| 46 | + |

| 47 | +### Step 6 |

| 48 | + |

| 49 | +Adding tags is optional. You can add tags to your instance to improve searchability. Then click "Next: Configure Security Group". |

| 50 | + |

| 51 | +### Step 7 |

| 52 | + |

| 53 | +Configure your security group to allow inbound traffic to the `local_port` number you specified in your `cortex.yaml` (the default is 8888 if not specified). Exposing this port lets you make requests to your API, but it also makes the API reachable by anyone on the internet, so consider restricting the allowed source to IP addresses you trust. Then click "Next: Review and Launch". |
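If you prefer the command line to the console, the same kind of inbound rule can be added with the AWS CLI. The sketch below only builds and prints the command so you can review it before running it yourself. The security group ID and IP address are hypothetical placeholders, and the helper name `ingress_command` is made up for this example.

```bash
# Sketch: print (but do not run) an AWS CLI command that opens the API port
# to a single IPv4 address rather than to 0.0.0.0/0.
# The group ID and IP address used below are hypothetical placeholders.

ingress_command() {
  # $1: security group ID, $2: port, $3: IPv4 address allowed to connect
  # The /32 suffix restricts the rule to exactly one IPv4 address.
  printf 'aws ec2 authorize-security-group-ingress --group-id %s --protocol tcp --port %s --cidr %s/32\n' \
    "$1" "$2" "$3"
}

# 8888 is the default local_port mentioned above
ingress_command "sg-0123456789abcdef0" 8888 "203.0.113.10"
```

Printing the command first makes it easy to double-check the port and source address before any rule is created.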

| 54 | + |

| 55 | + |

| 56 | + |

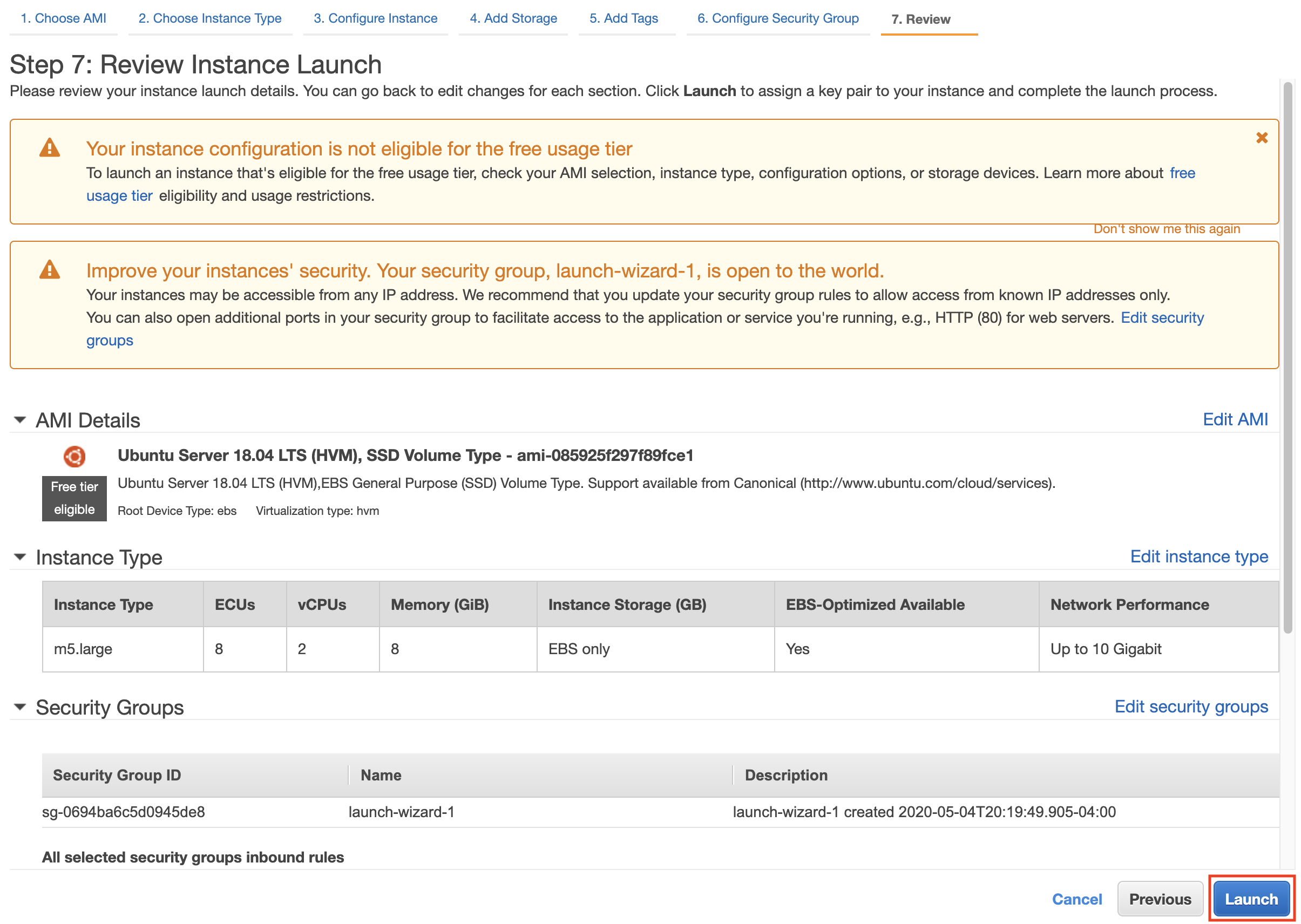

| 57 | +### Step 8 |

| 58 | + |

| 59 | +Double-check details such as instance type, CPU, memory, and exposed ports. Then click "Launch". |

| 60 | + |

| 61 | + |

| 62 | + |

| 63 | +### Step 9 |

| 64 | + |

| 65 | +You will be prompted to select a key pair, which is used to connect to your instance. Choose a key pair that you have access to. If you don't have one, you can create one and it will be downloaded to your browser's downloads folder. Then click "Launch Instances". |

| 66 | + |

| 67 | + |

| 68 | + |

| 69 | +### Step 10 |

| 70 | + |

| 71 | +Once your instance is running, follow the [relevant instructions](https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/AccessingInstances.html) to connect to your instance. |

| 72 | + |

| 73 | +If you are using a Mac or a Linux-based OS, these instructions can help you `ssh` into your instance: |

| 74 | + |

| 75 | +```bash |

| 76 | +# make sure you have .ssh folder |

| 77 | +$ mkdir -p ~/.ssh |

| 78 | + |

| 79 | +# if your key was downloaded to your Downloads folder, move it to your .ssh folder |

| 80 | +$ mv ~/Downloads/cortex-node.pem ~/.ssh/ |

| 81 | + |

| 82 | +# modify your key's permissions |

| 83 | +$ chmod 400 ~/.ssh/cortex-node.pem |

| 84 | + |

| 85 | +# get your instance's public DNS and then ssh into it (the default user on Ubuntu AMIs is "ubuntu") |

| 86 | +$ ssh -i ~/.ssh/cortex-node.pem ubuntu@<instance public DNS> |

| 87 | +``` |

| 88 | + |

| 89 | + |

| 90 | + |

| 91 | +### Step 11 |

| 92 | + |

| 93 | +Docker Engine needs to be installed on your instance before you can use Cortex. Skip to Step 12 if you are using the "Deep Learning AMI" because Docker Engine is already installed. Otherwise, follow these [instructions](https://docs.docker.com/engine/install/ubuntu/#install-using-the-repository) to install Docker Engine. |

| 94 | + |

| 95 | +Once Docker Engine is installed, enable the Docker commands to be used without `sudo`: |

| 96 | + |

| 97 | +```bash |

| 98 | +$ sudo groupadd docker; sudo gpasswd -a $USER docker |

| 99 | + |

| 100 | +# you must log out and back in for the permission changes to be effective |

| 101 | +$ logout |

| 102 | +``` |

| 103 | + |

| 104 | +If you have installed Docker correctly, you should be able to run docker commands such as `docker run hello-world` without running into permission issues or needing `sudo`. |
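If a Docker command still fails with a permission error, it can help to confirm that your current session actually picked up the `docker` group. The following is a minimal sketch; `has_group` is a hypothetical helper written for this example, not part of Docker or Cortex.

```bash
# Sketch: check whether the current login session includes the docker group.
# has_group is a hypothetical helper for illustration.

has_group() {
  # $1: space-separated group names (as printed by `id -nG`)
  # $2: the group name to look for
  for g in $1; do
    [ "$g" = "$2" ] && return 0
  done
  return 1
}

if has_group "$(id -nG)" docker; then
  echo "docker group is active in this session"
else
  echo "docker group is not active; log out and back in, then try again"
fi
```

Group changes made with `gpasswd` only apply to new login sessions, which is why the check reads the groups of the current session.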

| 105 | + |

| 106 | +### Step 12 |

| 107 | + |

| 108 | +Install the Cortex CLI. |

| 109 | + |

| 110 | +<!-- CORTEX_VERSION_MINOR --> |

| 111 | +```bash |

| 112 | +$ bash -c "$(curl -sS https://raw.githubusercontent.com/cortexlabs/cortex/master/get-cli.sh)" |

| 113 | +``` |

| 114 | + |

| 115 | +### Step 13 |

| 116 | + |

| 117 | +You can now use Cortex to deploy your model: |

| 118 | + |

| 119 | +```bash |

| 120 | +$ git clone https://github.com/cortexlabs/cortex.git |

| 121 | + |

| 122 | +$ cd cortex/examples/tensorflow/iris-classifier |

| 123 | + |

| 124 | +$ cortex deploy |

| 125 | + |

| 126 | +# take note of the curl command |

| 127 | +$ cortex get iris-classifier |

| 128 | +``` |

| 129 | + |

| 130 | +### Step 14 |

| 131 | + |

| 132 | +Make requests by replacing "localhost" in the curl command with your instance's public DNS: |

| 133 | + |

| 134 | +```bash |

| 135 | +$ curl -X POST -H "Content-Type: application/json" \ |

| 136 | + -d '{ "sepal_length": 5.2, "sepal_width": 3.6, "petal_length": 1.4, "petal_width": 0.3 }' \ |

| 137 | + <instance public DNS>:<Port> |

| 138 | +``` |
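The host substitution described in this step can also be scripted. The sketch below assumes the endpoint looks like `http://localhost:8888`; that format, the example DNS name, and the helper name `to_public_endpoint` are illustrative assumptions rather than output copied from Cortex.

```bash
# Sketch: replace "localhost" in an endpoint with the instance's public DNS.
# The endpoint format and the DNS name below are hypothetical examples.

to_public_endpoint() {
  # $1: endpoint containing "localhost", $2: public DNS of the instance
  # sed replaces only the first occurrence of "localhost" on the line.
  printf '%s\n' "$1" | sed "s/localhost/$2/"
}

to_public_endpoint "http://localhost:8888" "ec2-203-0-113-10.compute-1.amazonaws.com"
```

The printed value can then be used as the final argument of the `curl` command above.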
