This project is being developed with reference to [Detecting Invisible People], [MegaDepth], and [Deep Sort].

The code skeleton is based on "https://github.com/tarashakhurana/detecting-invisible-people"

- Create a conda environment (name: deepsort)

conda env create -f detecting-invisible-people/environment.yml

- The code expects your dataset to follow the MOT17 directory structure

MOT17/

-- train/

---- seq_01/

------ img1/ /* necessary */

------ img1Depth/ /* Can be generated with MegaDepth */

------ gt/gt.txt /* necessary */

------ det/det.txt /* necessary */

------ seqinfo.ini

...

-- test/

---- seq_02/

------ img1/ /* necessary */

------ img1Depth/ /* Can be generated with MegaDepth */

------ det/det.txt /* necessary */

------ seqinfo.ini

...

resources/

-- detections/

---- seq_01.npy /* Can be generated with ./tools/generate_detections.py */

-- networks/

---- mars_###.pb /* Can be generated with cosine_metric_learning */
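Before running anything, it can help to confirm that a sequence directory contains the entries marked "necessary" in the layout above. The following is a minimal sketch, not part of the repository; the function name `missing_entries` and the `split` parameter are hypothetical names chosen for illustration.

```python
from pathlib import Path

# Entries marked "necessary" in the layout above.
# gt/gt.txt is only listed for the train split.
REQUIRED_TRAIN = ["img1", "gt/gt.txt", "det/det.txt"]
REQUIRED_TEST = ["img1", "det/det.txt"]


def missing_entries(seq_dir, split="train"):
    """Return the required entries that are absent from one sequence directory."""
    required = REQUIRED_TRAIN if split == "train" else REQUIRED_TEST
    seq_dir = Path(seq_dir)
    return [name for name in required if not (seq_dir / name).exists()]
```

For example, `missing_entries("MOT17/train/seq_01")` returns an empty list when the sequence is complete.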

- To use a custom dataset, see the references below.

- seqinfo.ini

[Sequence]

name=MOT17-02-FRCNN /* Name of the dataset directory */

imDir=img1 /* Name of the image directory; keeping 'img1' is recommended */

frameRate=30

seqLength=600 /* Number of frames */

imWidth=1920

imHeight=1080

imExt=.jpg

- Image set format

Image names: 6-digit frame numbers starting from 1, e.g. 000001.jpg ~ 000600.jpg
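The relationship between seqinfo.ini and the image names can be checked with a short script. This is a sketch under two assumptions: the real seqinfo.ini does not contain the `/* ... */` annotations shown above (they are explanatory only), and the helper names `read_seqinfo` and `missing_frames` are hypothetical.

```python
import configparser
from pathlib import Path


def read_seqinfo(path):
    """Read the [Sequence] section of a seqinfo.ini file into a dict."""
    parser = configparser.ConfigParser()
    with open(path) as f:
        parser.read_file(f)
    seq = parser["Sequence"]
    return {
        "name": seq["name"],
        "imDir": seq["imDir"],
        "seqLength": seq.getint("seqLength"),
        "imExt": seq["imExt"],
    }


def missing_frames(seq_dir):
    """List expected image files (000001.jpg, ...) that are not on disk."""
    seq_dir = Path(seq_dir)
    info = read_seqinfo(seq_dir / "seqinfo.ini")
    img_dir = seq_dir / info["imDir"]
    # Frame numbers start at 1 and are zero-padded to 6 digits.
    expected = (f"{i:06d}{info['imExt']}" for i in range(1, info["seqLength"] + 1))
    return [name for name in expected if not (img_dir / name).exists()]
```

An empty result from `missing_frames` means every frame from 1 to seqLength is present under the image directory.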

- Part of the gt.txt in MOT17

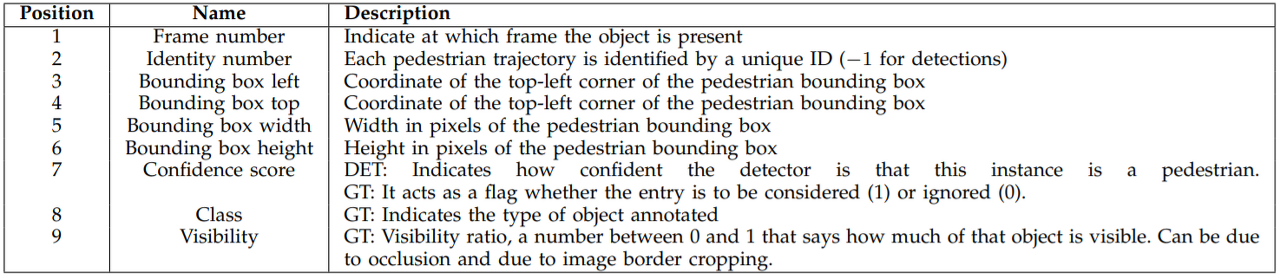

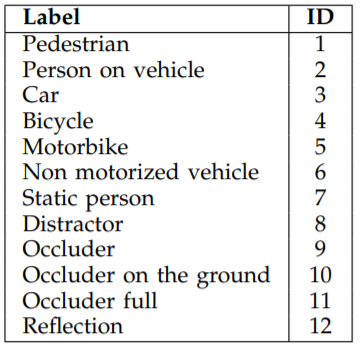

599,51,910,408,26,129,0,9,0.046154

600,51,910,408,26,129,0,9,0.046154

1,52,730,509,37,60,0,4,0.92105

2,52,730,509,37,60,0,4,0.94737

The gt.txt format (each line must contain 9 values)
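A gt.txt line can be read with a few lines of Python. The sketch below assumes the usual MOT Challenge column order (frame, track id, box left, box top, width, height, consider flag, class, visibility); the field names are illustrative and do not come from this repository.

```python
from typing import NamedTuple


class GtRow(NamedTuple):
    frame: int
    track_id: int
    x: float
    y: float
    width: float
    height: float
    flag: int          # assumed: whether the entry is considered in evaluation
    class_id: int      # assumed: object class
    visibility: float  # assumed: visible fraction of the box


def parse_gt_line(line):
    """Parse one gt.txt line, rejecting lines that do not have 9 values."""
    parts = line.strip().split(",")
    if len(parts) != 9:
        raise ValueError(f"expected 9 values, got {len(parts)}: {line!r}")
    x, y, w, h = (float(v) for v in parts[2:6])
    return GtRow(int(parts[0]), int(parts[1]), x, y, w, h,
                 int(parts[6]), int(parts[7]), float(parts[8]))
```

Raising on a wrong column count catches malformed custom annotations early.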

Part of the det.txt in MOT17

436,-1,696.2,429.5,72.8,285.6,0.996

436,-1,528.8,466.7,24.2,71.6,0.306

294,-1,752.6,445,65.1,198,1

294,-1,1517.6,430.2,241.1,461.2,1

The det.txt format (each line must contain 7 values)

frame id, default (-1), x, y, width, height, confidence score
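The 7-value det.txt layout described above can be parsed and filtered as follows. This is a minimal sketch for custom datasets; `parse_det_line`, `filter_by_confidence`, and the `min_confidence` threshold are hypothetical names, not functions from the repository.

```python
def parse_det_line(line):
    """Parse one det.txt line: frame id, -1, x, y, width, height, confidence."""
    parts = line.strip().split(",")
    if len(parts) != 7:
        raise ValueError(f"expected 7 values, got {len(parts)}: {line!r}")
    return {
        "frame": int(parts[0]),
        "id": int(parts[1]),  # always -1 in det.txt
        "x": float(parts[2]),
        "y": float(parts[3]),
        "width": float(parts[4]),
        "height": float(parts[5]),
        "confidence": float(parts[6]),
    }


def filter_by_confidence(lines, min_confidence):
    """Keep only detections whose confidence is at least min_confidence."""
    detections = (parse_det_line(line) for line in lines if line.strip())
    return [d for d in detections if d["confidence"] >= min_confidence]
```

Applied to the four sample lines above with `min_confidence=0.5`, the detection with score 0.306 is dropped.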

- Using ./tools/generate_detections.py

python tools/generate_detections.py --model=resources/networks/mars-0000.pb --mot_dir=./MOT17/train --output_dir=./resources/detections/MOT17_train

Generate mars-0000.pb by using Cosine Metric Learning

- Using MegaDepth: edit line 134 of MegaDepth/demo_images_new.py

images = sorted(glob.glob("path of img1/*.jpg"))

Generate the depth image sets

python MegaDepth/demo_images_new.py
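The edited line collects every frame of one sequence in sorted order. As a sketch of what that pattern does, the hypothetical helper below builds the glob pattern from a sequence directory; it assumes the images live in `img1/` as in the layout above and is not part of MegaDepth.

```python
import glob
import os


def list_sequence_images(seq_dir, ext=".jpg"):
    """Return the sorted image paths under <seq_dir>/img1 with the given extension."""
    pattern = os.path.join(seq_dir, "img1", "*" + ext)
    # Zero-padded 6-digit names sort lexicographically in frame order.
    return sorted(glob.glob(pattern))
```

Because the frame names are zero-padded, plain string sorting is enough to keep the frames in temporal order.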

- bash run_forecast_filtering.sh

- A pretrained model is available here

- Prepare the gt.txt and image sets

file format : [#ID][#tracklets][#bboxes][#distractors][#caml./ID]

folder format : object class

file info : extracted bbox_images

Using makedet.py, we can extract datasets from the image sets.